With the growing adoption of AI agents and LLM-powered applications, securing the communication layer between these systems has become critical.

Today, we're introducing two new Zuplo policies designed specifically to protect endpoints used by AI agents, LLMs and MCP servers: Prompt Injection Detection and Secret Masking.

These policies work seamlessly with our recently launched remote MCP server support, but they're equally valuable for any API endpoint that interfaces with LLMs or AI agents.

Want to see these policies in action with a remote MCP server and OpenAI? See the video below!

Why These Policies Matter

AI agents often process user-generated content and make API calls based on that input. This creates two primary security risks:

- Prompt injection attacks where malicious users attempt to manipulate the agent's behavior through crafted input

- Secret exposure where sensitive information like API keys or tokens might be inadvertently sent to downstream services

Prompt Injection Detection Policy

The Prompt Injection Detection policy uses a lightweight agentic workflow to analyze outbound content for potential prompt poisoning attempts.

By default, it uses OpenAI's API with the gpt-3.5-turbo model, but it will

work with any service that has an OpenAI-compatible API, as long as the model

supports tool calling. This includes models you host yourself,

Ollama if you're developing locally, or models hosted on

other services such as Hugging Face.

Normal content passes through unchanged:

Malicious injection attempts are blocked with a 400 response:

This rejection would cause a tool call to fail, but you could also intercept the rejection and return more specific errors and reasoning using Zuplo's Custom Code Outbound policy.

Secret Masking Policy

The Secret Masking policy automatically redacts sensitive information from outbound requests, preventing accidental exposure to downstream consumers.

This is particularly important when AI agents have access to sensitive data that shouldn't be transmitted to external services.

The policy automatically masks common secret patterns:

- Zuplo API keys (

zpka_xxx) - GitHub tokens and Personal Access Tokens (

ghp_xxx) - Private key blocks (

BEGIN PRIVATE KEY...END PRIVATE KEY)

You can also define custom masking patterns using the additionalPatterns

option.

The pattern "\\b(\\w+)=\\w+\\b" in the configuration example below looks for

key-value pairs in the format key=value where both the key and value consist

of word characters. This would mask patterns like password=secret123 or

token=abc456.

Configuration

Using Both Policies Together

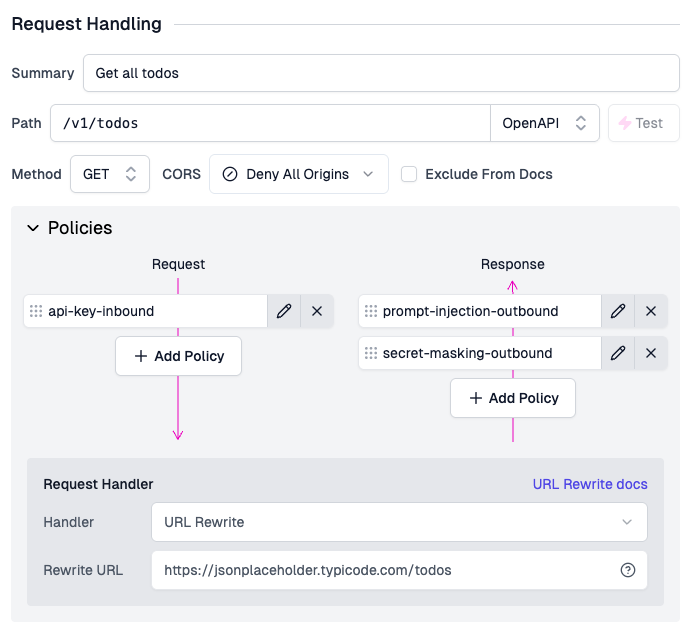

These policies complement each other perfectly. Here's how to configure them together on an MCP server route:

This configuration ensures that:

- Sensitive secrets are masked before being sent to your MCP server

- Any prompt injection attempts are detected and blocked

- Your AI agents can safely process user content without security risks

The same can be setup as outbound policies for the response of any route in the Zuplo portal, as shown below:

Beyond MCP Servers

While these policies work great with MCP servers, they're valuable for any API endpoint that handles AI agent traffic. Consider applying them to:

- Webhook endpoints that receive user-generated content

- API routes that forward data to LLM services

- Integration endpoints that bridge user input with AI systems

These new policies provide essential security layers for AI-powered applications, helping you build robust and secure agent workflows with confidence.

Have thoughts on this topic? Want to talk to us about our new remote MCP Server support in Zuplo? Join us in the #mcp channel of our Discord. We'd love to hear from you!

More from MCP Week

This article is part of Zuplo's MCP Week. A week dedicated to Model Context Protocol, AI, LLMs and, of course, APIs centered around the release of our support for remote MCP servers.

You can find the other articles and videos from this week below:

- Day 1: Why MCP Won't Kill APIs with Kevin Swiber

- Day 2: Zuplo launches remote MCP Servers for your APIs!

- Day 3: The AI Agent Reality Gap with Zdenek "Z" Nemec (Superface)

- Day 4: Two Essential Security Policies for MCP & AI with Martyn Davies

- Day 5: AI Agents Are Coming For Your APIs with John McBride