Model Context Protocol (MCP) servers are becoming a critical component of AI-powered applications, enabling seamless integration between AI agents and various data sources. However, as these servers handle user-generated content that flows directly to downstream LLMs, they introduce a significant security risk: prompt injection attacks.

What is Prompt Injection?

Prompt injection occurs when malicious input manipulates an AI model's behavior by embedding instructions within seemingly innocent content. Unlike traditional injection attacks that target databases or systems, prompt injection exploits the conversational nature of LLMs.

Consider this seemingly harmless API response from an MCP server:

When a downstream AI agent processes this content, the embedded instruction could override the agent's original purpose, potentially causing data leaks or unauthorized actions.

Why MCP Servers Are Prime Targets

MCP servers are particularly vulnerable because they:

- Process user-generated content from various sources

- Return data that's directly consumed by AI agents

- Often operate as trusted intermediaries in AI workflows

- May aggregate content from multiple, potentially compromised sources

A successful prompt injection through an MCP server can compromise the entire AI agent's behavior, not just a single interaction.

Detection and Prevention

Effective prompt injection detection requires analyzing outbound content before it reaches downstream AI agents. This involves:

- Content Analysis: Using specialized models to identify potential injection patterns

- Context Evaluation: Understanding how content might influence downstream AI behavior

- Real-time Blocking: Preventing malicious content from reaching AI agents

Implementing Protection with Zuplo

Zuplo provides a built-in Prompt Injection Detection Policy that uses a small agentic workflow to analyze outbound content.

Basic Configuration

This policy can be added in the policies.json file in any Zuplo project.

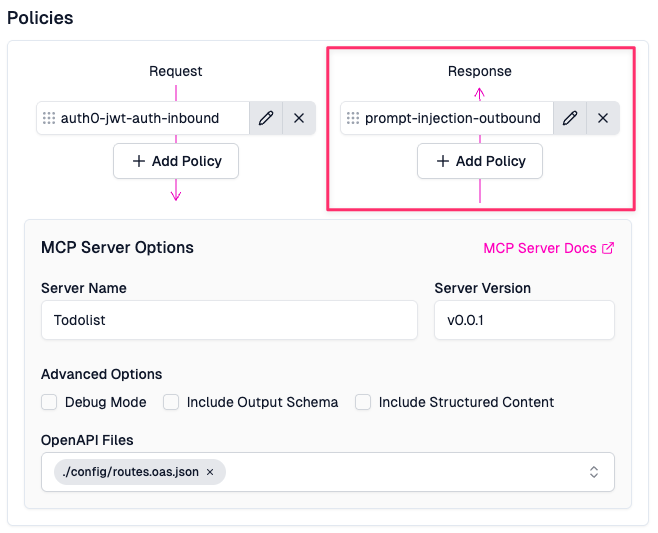

It can also be added in the browser-based Zuplo Portal, from the policy picker on the Response Policies section of your MCP (or any other) route.

MCP Server Integration

For MCP servers (or other API routes), apply the policy to your outbound routes:

Local Development

This policy can also be tested locally. We recommend using Ollama and running a lightweight model that supports tool calling and the OpenAI API format.

You can change the Prompt Injection Detection policy options to reflect your local setup, like so:

Strict vs. Permissive Mode

The policy offers two operational modes:

- Permissive (

strict: false): Allows content through if detection fails - Strict (

strict: true): Blocks all content when detection is unavailable

Choose strict mode for high-security environments where false positives are preferable to potential injections.

Best Practices

- Use capable models: Ensure your detection model supports tool calling (GPT-3.5-turbo, GPT-4, Llama 3.1, Qwen3)

- Balance speed vs. accuracy: Smaller models are faster but may miss sophisticated attacks

- Monitor detection rates: Track false positives and negatives to tune your setup

- Layer your defenses: Combine detection with other protections such as input validation and output sanitization

Zuplo has many inbound and outbound policies that pair well with Prompt Injection Detection, such as Secret Masking, and our Web Bot Auth policy.

Conclusion

As MCP servers become integral to AI applications, protecting them from prompt injection attacks is crucial for maintaining system security and reliability. With proper detection mechanisms in place, you can safely leverage the power of MCP while protecting downstream AI agents from manipulation.

Implementing prompt injection detection doesn't just protect your AI agents, it builds trust with users and ensures your AI-powered applications behave as intended.

Try It For Free

Ready to implement an MCP Server for your API and protect it with Prompt Injection Detection? You can get started by registering for a free Zuplo account.