If you're building applications that call LLMs or handle natural language queries, you've probably noticed a familiar problem: users ask the same thing in slightly different ways, and each variation triggers a fresh (and potentially expensive) request to your backend.

Traditional caching won't help here because it relies on exact matches. "What's the capital of France?" and "Tell me France's capital city" are treated as completely different requests, even though they deserve the same response.

Semantic caching solves this by understanding what users mean, not just what they type.

What Is Semantic Caching?

Semantic caching stores and retrieves responses based on the meaning of requests rather than their exact text. Instead of comparing strings character-by-character, it uses embeddings (vector representations of text) to measure how similar two requests are conceptually.
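To make "how similar" concrete, here is a minimal TypeScript sketch of cosine similarity, a common metric for comparing embedding vectors. The article doesn't say which metric Zuplo uses, so treat this as an illustration of the general idea. The three-dimensional vectors are made-up values; real embedding models produce vectors with hundreds or thousands of dimensions.

```typescript
// Cosine similarity: the dot product of two vectors divided by the product
// of their lengths. 1 means the vectors point the same way; 0 means they are
// orthogonal (unrelated).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same number of dimensions");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) {
    return 0; // treat a zero vector as unrelated to everything
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hypothetical toy embeddings, for illustration only.
const capitalOfFrance = [0.9, 0.1, 0.3]; // "What's the capital of France?"
const franceCapitalCity = [0.85, 0.15, 0.32]; // "Tell me France's capital city"
const weatherInTokyo = [0.1, 0.9, 0.2]; // "What's the weather in Tokyo?"

console.log(cosineSimilarity(capitalOfFrance, franceCapitalCity).toFixed(3));
console.log(cosineSimilarity(capitalOfFrance, weatherInTokyo).toFixed(3));
```

The two phrasings about France's capital score close to 1, while the unrelated weather question scores much lower.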

When a new request comes in, the cache checks whether any stored requests are semantically similar. If the similarity score exceeds a configured threshold, the cached response is returned. If not, the request proceeds to your backend, and the new response gets cached for future matches.
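The request flow above can be sketched as a small in-memory cache in TypeScript. This is a minimal illustration, not how Zuplo implements it. `embed` is a hypothetical stand-in for a real embedding model (a toy bag-of-words vector, so the example runs offline), `callBackend` is a hypothetical stand-in for your LLM or API, and the linear scan over entries replaces the vector index a production system would typically use.

```typescript
type Vector = Map<string, number>;

interface CacheEntry {
  embedding: Vector;
  response: string;
}

// Hypothetical stand-in for an embedding model: counts each lowercase word.
// It matches shared words, not meaning, unlike a real embedding model.
function embed(text: string): Vector {
  const vector: Vector = new Map();
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    vector.set(word, (vector.get(word) ?? 0) + 1);
  }
  return vector;
}

function norm(v: Vector): number {
  let sumOfSquares = 0;
  v.forEach((count) => {
    sumOfSquares += count * count;
  });
  return Math.sqrt(sumOfSquares);
}

function cosineSimilarity(a: Vector, b: Vector): number {
  let dot = 0;
  a.forEach((count, word) => {
    dot += count * (b.get(word) ?? 0);
  });
  const denominator = norm(a) * norm(b);
  return denominator === 0 ? 0 : dot / denominator;
}

class SemanticCache {
  private readonly entries: CacheEntry[] = [];
  private readonly threshold: number;

  constructor(threshold: number) {
    this.threshold = threshold;
  }

  // Returns the response of the most similar stored request if its
  // similarity meets the threshold; otherwise undefined (a cache miss).
  lookup(query: string): string | undefined {
    const queryEmbedding = embed(query);
    let best: CacheEntry | undefined;
    let bestScore = -Infinity;
    for (const entry of this.entries) {
      const score = cosineSimilarity(queryEmbedding, entry.embedding);
      if (score > bestScore) {
        bestScore = score;
        best = entry;
      }
    }
    return best !== undefined && bestScore >= this.threshold ? best.response : undefined;
  }

  store(query: string, response: string): void {
    this.entries.push({ embedding: embed(query), response });
  }
}

// On a hit, return the cached response; on a miss, call the backend and
// cache its response for future matches.
async function handle(
  cache: SemanticCache,
  query: string,
  callBackend: (q: string) => Promise<string>,
): Promise<{ response: string; cacheHit: boolean }> {
  const cached = cache.lookup(query);
  if (cached !== undefined) {
    return { response: cached, cacheHit: true };
  }
  const response = await callBackend(query);
  cache.store(query, response);
  return { response, cacheHit: false };
}
```

With this toy embedding, "Tell me France's capital city" scores only 0.5 against "What's the capital of France?" because the two share just three of six words. That gap is exactly what a learned embedding model closes, and it is why real semantic caches rely on one.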

This approach is particularly powerful for AI applications where users naturally phrase the same intent in many different ways.

Why Should You Use It?

Semantic caching delivers three key benefits for APIs.

Cost reduction: LLM API calls are expensive. When semantically similar requests return cached responses, you avoid paying for redundant inference. For applications with common request patterns, this can significantly reduce your AI spend, with savings that grow with your cache hit rate.
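As a back-of-the-envelope way to reason about savings, the sketch below assumes every cache hit avoids one LLM call, while every request (hit or miss) still pays for one embedding call. All prices and rates are hypothetical placeholders, and costs such as cache storage are not modeled; substitute your own provider's pricing.

```typescript
interface CostInputs {
  requests: number; // total requests in the period
  hitRate: number; // fraction served from the cache, 0 to 1
  llmCostPerCall: number; // average cost of one LLM call, in dollars
  embeddingCostPerCall: number; // cost of embedding one request, in dollars
}

// Net savings compared with sending every request to the LLM.
function estimateSavings(c: CostInputs): number {
  const withoutCache = c.requests * c.llmCostPerCall;
  const withCache =
    c.requests * (1 - c.hitRate) * c.llmCostPerCall + // misses still hit the LLM
    c.requests * c.embeddingCostPerCall; // every request is embedded
  return withoutCache - withCache;
}

// Hypothetical numbers: 100,000 requests, 30% hit rate,
// $0.01 per LLM call, $0.0001 per embedding.
const monthly = estimateSavings({
  requests: 100_000,
  hitRate: 0.3,
  llmCostPerCall: 0.01,
  embeddingCostPerCall: 0.0001,
});
console.log(`Estimated savings: $${monthly.toFixed(2)}`);
```

The embedding term means that with a very low hit rate, caching can cost slightly more than it saves, so it is worth measuring your actual hit rate.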

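Faster response times: A cache hit still requires embedding the request and running a similarity lookup, but that typically takes far less time than generating a new response, which for an LLM can take seconds. Users and downstream services get near-instant responses for requests similar to ones already processed.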

Reduced backend load: Fewer requests reaching your LLM provider or compute-intensive backend means less strain on rate limits and quotas. This becomes especially valuable during traffic spikes.

When Does Semantic Caching Make Sense?

Semantic caching works best when requests that differ in phrasing should return the same result.

Customer support chatbots are a common example. Users ask "How do I reset my password?" in dozens of ways: "I forgot my password," "Can't log in, need to change password," "Where's the password reset option?" All of these could share a cached response.

Search and recommendation APIs also benefit. Product searches like "comfortable running shoes" and "good shoes for jogging" might return identical results, so caching the first response saves compute on the second.

Content classification and tagging services often receive similar inputs. An API that categorizes support tickets might see "Payment failed on checkout" and "Checkout payment error" as functionally identical, making them good candidates for a shared cached response.

Text transformation APIs that summarize, translate, or reformat content can cache results when input text is semantically equivalent, even if whitespace, punctuation, or minor wording differs.

RAG (retrieval-augmented generation) pipelines frequently process similar queries. Caching at the query embedding stage prevents redundant document retrieval and generation for requests that would return the same context.

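Semantic caching is less useful when every request is truly unique, when response freshness is critical (like real-time data), or when small phrasing differences should produce different responses. For example, "convert 100 USD to EUR" and "convert 100 EUR to USD" may score as highly similar, yet they need different answers.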

Implementing Semantic Caching with Zuplo

Zuplo provides semantic caching in two ways, depending on what you're building.

Semantic Cache Policy

For API Gateway projects, Zuplo offers a Semantic Cache Policy that you can attach to any route.

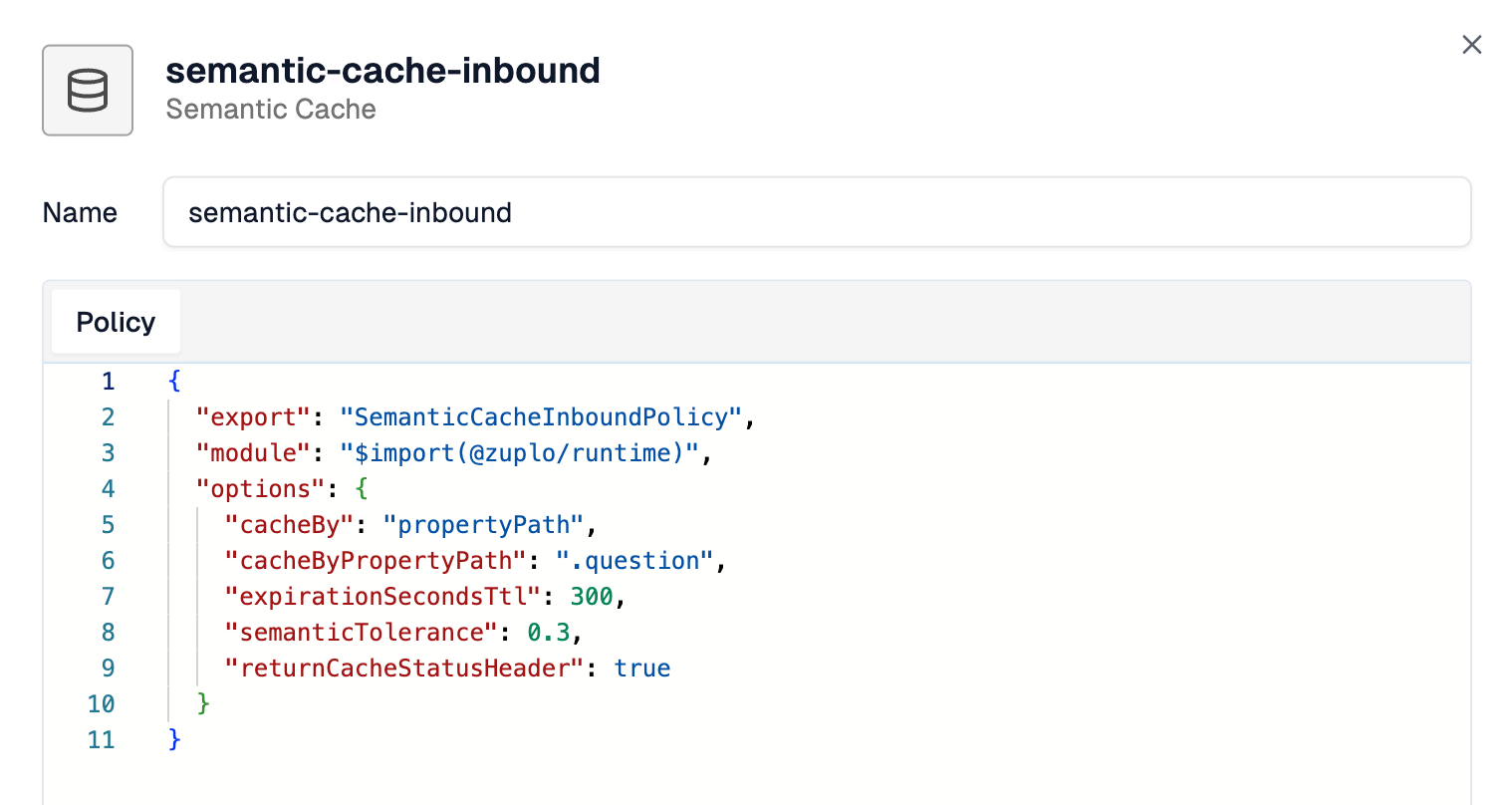

The policy extracts a cache key from your request (typically from the request body), checks for semantically similar cached entries, and either returns the cached response or lets the request proceed.
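As a hypothetical illustration of cache-key extraction, the TypeScript sketch below assumes an OpenAI-style chat completions body with a `messages` array and uses the most recent user message as the key. The function name and the choice of field are assumptions for this example; in Zuplo you configure key extraction through the policy's own options rather than writing this code.

```typescript
// Assumed shape of an OpenAI-style chat request body.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequestBody {
  model?: string;
  messages: ChatMessage[];
}

// Hypothetical extractor: uses the latest user message as the cache key and
// collapses whitespace so trivial formatting differences don't matter.
// Returns undefined when there is nothing sensible to cache on.
function extractCacheKey(body: unknown): string | undefined {
  if (typeof body !== "object" || body === null) return undefined;
  const messages = (body as Partial<ChatRequestBody>).messages;
  if (!Array.isArray(messages)) return undefined;
  for (let i = messages.length - 1; i >= 0; i--) {
    const message = messages[i];
    if (message?.role === "user" && typeof message.content === "string") {
      const key = message.content.trim().replace(/\s+/g, " ");
      return key.length > 0 ? key : undefined;
    }
  }
  return undefined;
}

const body = JSON.parse(
  '{"model":"example-model","messages":[' +
    '{"role":"system","content":"Be brief."},' +
    '{"role":"user","content":"  What is   the capital of France? "}]}',
);
console.log(extractCacheKey(body));
```

Keying on only the latest user message ignores the system prompt and earlier turns. If those can change the answer, include them in the key as well; otherwise requests with different context may wrongly share a cached response.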

Configuration is straightforward. You specify how to extract the cache key, set a semantic tolerance (how similar requests need to be for a cache hit), and define a TTL for cached entries.

The tolerance setting controls matching strictness on a 0-1 scale, where lower values require closer semantic matches and higher values allow more flexible matching.
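One plausible reading of a 0-1 tolerance is the maximum allowed cosine distance (1 minus cosine similarity) between two requests. The TypeScript sketch below assumes that interpretation and adds TTL-based expiry to show how the two settings combine; Zuplo's internal scoring may differ. The function names are hypothetical, and `now` is a parameter so expiry can be tested deterministically.

```typescript
interface TimedEntry {
  response: string;
  storedAt: number; // milliseconds since the Unix epoch
}

// Assumption: tolerance is the maximum cosine distance (1 - similarity).
// A tolerance of 0 accepts only identical meanings; higher values accept
// looser matches.
function withinTolerance(similarity: number, tolerance: number): boolean {
  if (tolerance < 0 || tolerance > 1) {
    throw new RangeError("tolerance must be between 0 and 1");
  }
  return 1 - similarity <= tolerance;
}

// An entry is usable only until its TTL has elapsed.
function isFresh(entry: TimedEntry, ttlSeconds: number, now: number = Date.now()): boolean {
  return now - entry.storedAt < ttlSeconds * 1000;
}

// Serve a cached response only if it is both similar enough and fresh.
function shouldServe(
  similarity: number,
  entry: TimedEntry,
  tolerance: number,
  ttlSeconds: number,
  now: number = Date.now(),
): boolean {
  return withinTolerance(similarity, tolerance) && isFresh(entry, ttlSeconds, now);
}
```

Under this interpretation, two requests with a similarity of 0.95 are a hit at a tolerance of 0.1 but a miss at 0.02.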

AI Gateway with Built-in Semantic Caching

For teams using LLMs in production, Zuplo's AI Gateway includes semantic caching as a built-in feature. When creating an application in the AI Gateway, you can enable semantic caching with a single toggle. The gateway handles the embedding generation, similarity matching, and cache management automatically.

This approach pairs semantic caching with other AI Gateway capabilities like cost controls, team budgets, provider abstraction, and security guardrails. You get a complete solution for managing LLM access across your organization.

Try an Example

The Semantic Caching Example is a working project that demonstrates cache hits and misses with semantically similar queries. You can deploy it or run it locally to test different semantic tolerances.

Conclusion

Semantic caching won't make sense for every API. But for applications where users naturally ask similar questions in different ways, it's a straightforward way to reduce costs, improve performance, and deliver a better experience.

Next Steps

If you're already using Zuplo, adding semantic caching is a matter of configuring the policy on your routes or enabling it in your AI Gateway app settings.

If you're new to Zuplo, the semantic caching example is a good starting point. From there, you can explore the Semantic Cache Policy documentation for advanced configuration options, or check out the AI Gateway documentation if you're working with LLM providers and want the full suite of management features.