The $134 billion AI software market has created a gold rush for companies that can package and sell AI capabilities through APIs. Companies like OpenAI and Anthropic have shown how to turn complex machine learning models into accessible services that developers can integrate with just a few lines of code. In turn, they've generated millions of dollars in revenue simply by monetizing their APIs.

This success has opened opportunities for more companies to monetize their AI models through well-designed APIs. In this article, I'll cover the key components of building secure, flexible AI APIs and implementing effective monetization strategies.

Why APIs are at the Core of Modern AI

APIs (Application Programming Interfaces) are the bridges that connect your AI model to the outside world: your customers, partners, or even your internal apps. Instead of shipping a standalone AI product (e.g., ChatGPT), which can be big, complicated, and hard to maintain, you just need to deploy an API endpoint that developers can call to leverage your AI’s magic.

- OpenAI is one of the best-known examples of a company offering API-based AI services. Their API allows developers to integrate advanced language models, such as GPT, into their apps without having to reinvent the AI wheel.

- Archetype similarly offers an API to deliver on-device AI agents that can automate tasks, sense the physical world, and provide real-time insights.

In both cases, the business model is pretty straightforward: customers make calls to the API, and every call (or group of calls) generates revenue. Let’s explore how this can work in more detail—and what considerations you should have in mind as a founder or engineering lead.

Monetization Models

Not all AI APIs are priced the same. We have a few AI customers here at Zuplo, and these are the common pricing strategies that come up when we discuss monetizing AI services with them.

1. Usage-Based Pricing (Pay-as-You-Go)

Who uses it? OpenAI, Anthropic

How it works: You charge developers based on the number of requests (API calls) they make, the volume of data processed, or the computational resources consumed. In the case of LLM-based APIs, you typically charge based on input and output tokens.
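As a rough illustration of token-based charging, the sketch below prices a single request from its input and output token counts. The per-million-token prices and the `request_cost` helper are hypothetical and do not reflect any provider's real rates.

```python
from decimal import Decimal

# Hypothetical prices in USD per one million tokens (not any provider's real rates).
INPUT_PRICE_PER_MILLION = Decimal("3.00")
OUTPUT_PRICE_PER_MILLION = Decimal("15.00")
ONE_MILLION = Decimal(1_000_000)


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Return the charge in USD for one request, priced per token."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = Decimal(input_tokens) * INPUT_PRICE_PER_MILLION / ONE_MILLION
    output_cost = Decimal(output_tokens) * OUTPUT_PRICE_PER_MILLION / ONE_MILLION
    return input_cost + output_cost


# A request with 1,200 input tokens and 350 output tokens.
print(request_cost(1200, 350))
```

Using `Decimal` rather than floats avoids rounding drift when many small per-request charges are summed into an invoice.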

Why it’s great:

- You can attract a wide range of customers, from hobbyists to large enterprises.

- Revenue scales with usage.

Challenges:

- Predicting revenue can be harder. This can be somewhat mitigated with minimum spend commitments that are coupled with volume-based discounts to incentivize steady or increased usage over time.

- Requires robust usage-tracking and billing systems.

My take: This is the most popular model for AI APIs because it aligns costs with value. You only pay for what you use, which can be great for customers, and it ensures that if your AI takes off, your revenue does, too.
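The minimum spend commitment and volume discount mentioned under Challenges can be sketched as follows. The discount schedule and the `monthly_invoice` function are assumptions made up for illustration; real contracts vary widely.

```python
from decimal import Decimal

# Hypothetical schedule: (monthly usage threshold in USD, discount rate),
# sorted by ascending threshold.
VOLUME_DISCOUNTS = [
    (Decimal("0"), Decimal("0.00")),
    (Decimal("1000"), Decimal("0.10")),
    (Decimal("10000"), Decimal("0.20")),
]


def monthly_invoice(metered_usage: Decimal, minimum_commit: Decimal) -> Decimal:
    """Apply the highest discount tier reached, then enforce the minimum commitment."""
    discount = Decimal("0")
    for threshold, rate in VOLUME_DISCOUNTS:
        if metered_usage >= threshold:
            discount = rate
    discounted = metered_usage * (1 - discount)
    # The customer pays whichever is larger: discounted usage or the commitment.
    return max(discounted, minimum_commit).quantize(Decimal("0.01"))


print(monthly_invoice(Decimal("200"), Decimal("500")))   # usage below the commitment
print(monthly_invoice(Decimal("2000"), Decimal("500")))  # usage in the 10% tier
```

This version discounts the whole bill once a threshold is crossed, which creates a price cliff at each boundary. Discounting only the usage above each threshold is a common alternative if that matters for your customers.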

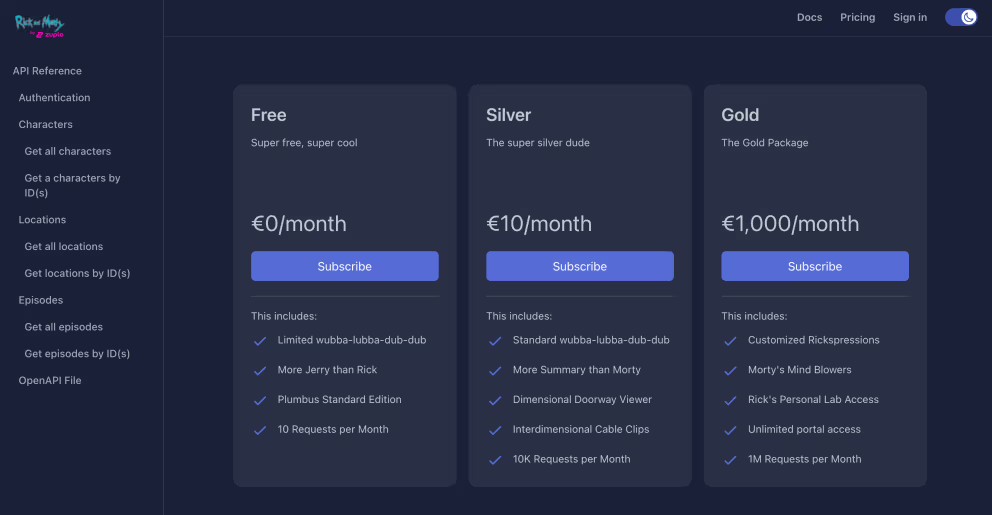

2. Tiered Subscription Plans

Who uses it? Many smaller AI startups and SaaS platforms

How it works: Offer a few pricing tiers (e.g., Free, Pro, Enterprise), each with fixed usage limits.

Why it’s great:

- Predictable revenue and cost structure.

- Simple for customers to understand.

Challenges:

- You could leave money on the table if your top-tier plan doesn’t account for super-high usage. Sometimes it’s best to have an open-ended, uncapped enterprise tier that you negotiate with the company directly.

- Harder to fine-tune for different workloads or specialized use cases. You might be able to make your plans more flexible by introducing overages.

My take: Tiered subscriptions are a solid approach if your AI product has well-defined tiers (e.g., number of predictions per month). But you need to keep an eye on usage so you don’t inadvertently over-serve heavy users without proper billing infrastructure in place. We'll cover how to build a scalable AI model API further down in the article.
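To make the tier and overage idea concrete, here is a minimal sketch of plans with fixed included usage, where a plan either hard-caps requests or bills overages. The plan names, prices, and limits are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_fee: Decimal
    included_requests: int
    # Price per request beyond the included amount; None means a hard cap.
    overage_price: Optional[Decimal]


# Hypothetical plans for illustration only.
PLANS = {
    "free": Plan("free", Decimal("0"), 1_000, None),
    "pro": Plan("pro", Decimal("49"), 100_000, Decimal("0.001")),
}


def is_request_allowed(plan: Plan, requests_used: int) -> bool:
    """Hard-capped plans reject requests once the included quota is spent."""
    return plan.overage_price is not None or requests_used < plan.included_requests


def monthly_charge(plan: Plan, requests_used: int) -> Decimal:
    """Flat fee plus overage for requests beyond the included amount."""
    extra = max(0, requests_used - plan.included_requests)
    overage = (plan.overage_price or Decimal("0")) * extra
    return plan.monthly_fee + overage


print(monthly_charge(PLANS["pro"], 120_000))
print(is_request_allowed(PLANS["free"], 1_000))
```

Keeping the cap-or-overage decision on the plan itself means heavy users are either stopped or billed, never silently over-served.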

3. Enterprise Contracts and Custom Solutions

Who uses it? Large AI providers looking to serve Fortune 500 clients

How it works: Negotiate custom contracts with big companies based on specific usage levels, dedicated support, and specialized features.

Why it’s great:

- Bigger, more stable revenue per deal.

- You can co-develop specialized AI features (e.g., vertical-specific fine-tuned versions of your model).

Challenges:

- Long sales cycles.

- High demands on your product and support teams.

My take: Enterprise deals can be lucrative but require more time and effort. If you’re just starting out, I recommend having a straightforward usage-based or tiered option first, then layering on an enterprise plan as you grow. Taking on a big customer when you're not ready can potentially kill your startup if the deal goes south.

Key Tools for Building and Monetizing AI APIs

Here are the tools I've seen used, or read about being used, to build and monetize AI APIs.

1. Cloud Hosting and Infrastructure

- AWS (SageMaker, EC2, Bedrock), GCP (Vertex AI), Azure (Machine Learning) all provide managed services for training and deploying AI models.

- Pro tip: If you’re dealing with super-high throughput or real-time inference, you might need specialized GPU hosting or on-prem solutions.

2. API Management and Gateway

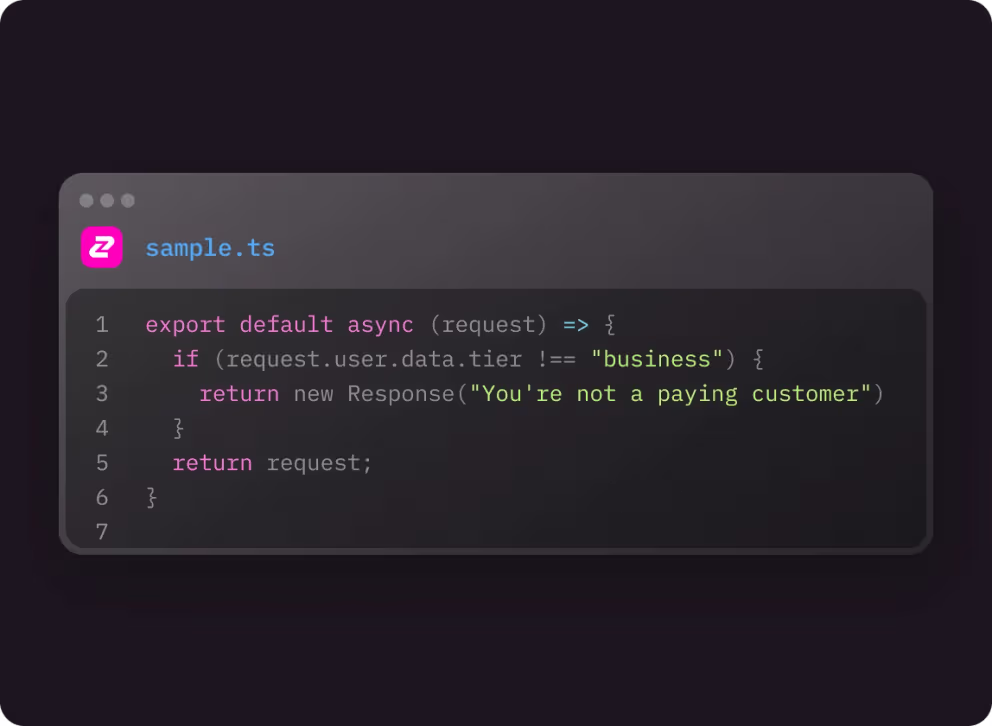

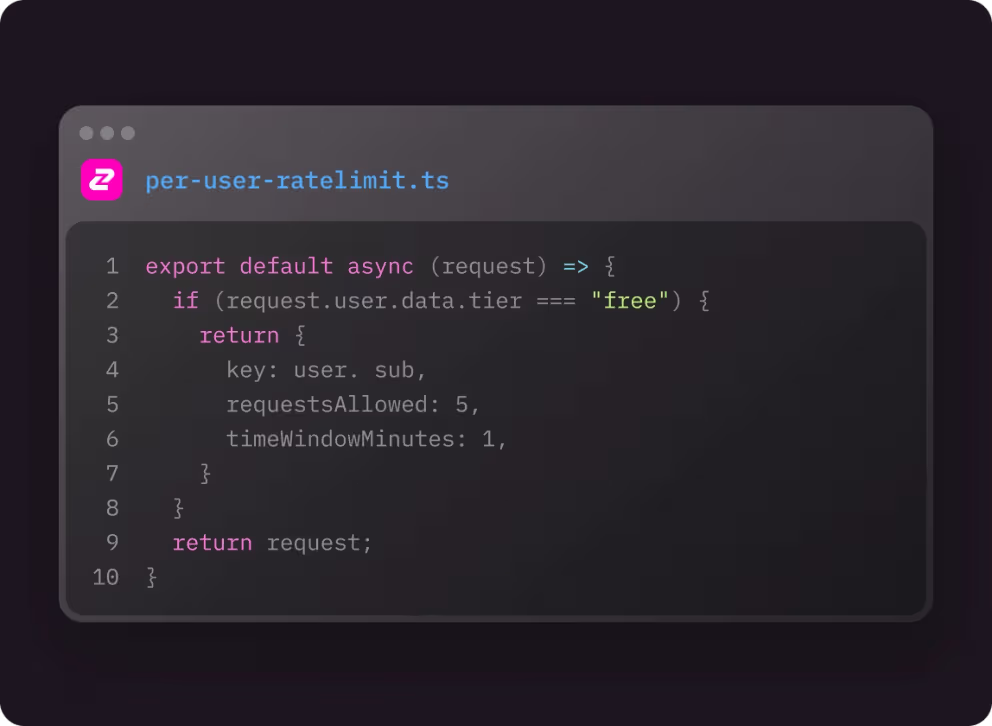

- You'll want an API gateway and management tool from day 1 to build a well-designed and reliable API. Companies like DeepSeek AI had several production issues scaling their API. Tools like Zuplo or Apigee help you set up authentication, rate-limiting, usage tracking, and metering/monetization logic.

- Pro tip: If you plan to have a usage-based billing structure, you’ll need fine-grained analytics. Using an API gateway with built-in usage monitoring can save you a ton of headaches.
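For a sense of what fine-grained usage tracking involves, the sketch below aggregates per-request events by API key and endpoint. It is an in-memory illustration with hypothetical names (`UsageEvent`, `UsageMeter`), not how any particular gateway implements metering.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageEvent:
    api_key_id: str
    endpoint: str
    input_tokens: int
    output_tokens: int


class UsageMeter:
    """In-memory usage meter. A real deployment would persist events durably."""

    def __init__(self) -> None:
        # (api_key_id, endpoint) -> running counters
        self._totals = defaultdict(
            lambda: {"requests": 0, "input_tokens": 0, "output_tokens": 0}
        )

    def record(self, event: UsageEvent) -> None:
        counters = self._totals[(event.api_key_id, event.endpoint)]
        counters["requests"] += 1
        counters["input_tokens"] += event.input_tokens
        counters["output_tokens"] += event.output_tokens

    def totals_for(self, api_key_id: str) -> dict:
        """Sum counters across every endpoint used by one API key."""
        summary = {"requests": 0, "input_tokens": 0, "output_tokens": 0}
        for (key_id, _endpoint), counters in self._totals.items():
            if key_id == api_key_id:
                for name, value in counters.items():
                    summary[name] += value
        return summary


meter = UsageMeter()
meter.record(UsageEvent("key_a", "/v1/chat", 100, 40))
meter.record(UsageEvent("key_a", "/v1/embed", 50, 0))
print(meter.totals_for("key_a"))
```

Recording by endpoint as well as by key keeps the option open to price endpoints differently later.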

Zuplo also has a quick video tutorial on implementing API monetization in 20 minutes.

3. Billing and Payments

- This is likely the most complicated and sensitive piece of your infrastructure, so don't build it yourself. Stripe and Paddle can handle payments and subscriptions. Check out my billing provider guide to understand how each works.

- For usage-based billing, you’ll need to integrate your usage metrics with your billing tool; Stripe and Paddle should both support this.

- Pro tip: Make sure to test your billing flows thoroughly—you don’t want a fiasco where you accidentally under- or over-charge your customers.
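One common source of over-charging is reporting the same usage twice after a retry. The sketch below guards against that by deduplicating on an event ID before forwarding usage to the billing provider. The `send` callable is a hypothetical stand-in for whatever your billing tool's client exposes; it is not a Stripe or Paddle API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class UsageReporter:
    """Forwards usage to a billing provider at most once per event ID."""

    # Hypothetical hook: called with (customer_id, quantity).
    send: Callable[[str, int], None]
    _seen: set = field(default_factory=set)

    def report(self, event_id: str, customer_id: str, quantity: int) -> bool:
        """Return True if the usage was forwarded, False if it was a duplicate."""
        if event_id in self._seen:
            return False
        # Mark the event as seen only after send succeeds, so a failed
        # call can be retried safely.
        self.send(customer_id, quantity)
        self._seen.add(event_id)
        return True


sent = []
reporter = UsageReporter(send=lambda customer, qty: sent.append((customer, qty)))
reporter.report("evt_1", "cus_a", 500)
reporter.report("evt_1", "cus_a", 500)  # retry of the same event is ignored
print(sent)
```

In production the set of seen IDs would live in a durable store, and it is worth checking whether your billing provider accepts an idempotency key of its own.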

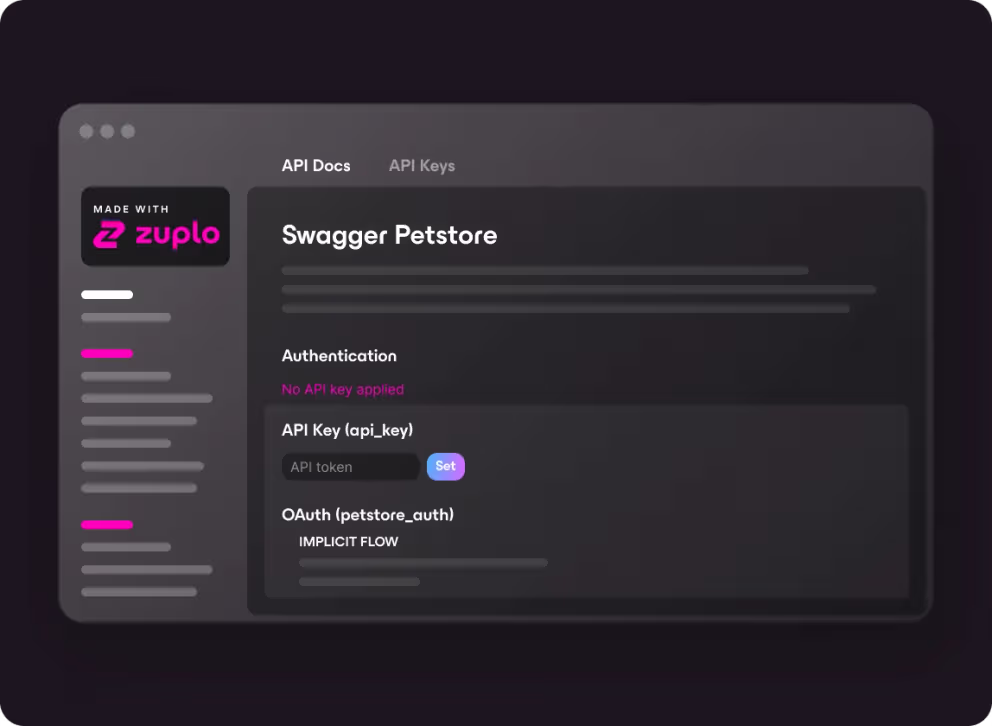

4. Documentation and Developer Experience

- Tools like Zudoku, Scalar, or Mintlify can help you create great documentation. Some gateways actually generate a developer portal which allows you to integrate your gateway's authentication mechanism directly into the onboarding flow.

- Pro tip: A slick dev portal with quick starts, code snippets, and interactive demos can drastically improve adoption.

Building Reliable AI APIs

The foundation of a successful AI API starts with solid infrastructure. You don't want to end up like DeepSeek where you release a massively popular model, but it fails to scale and you can't serve your users. Modern API gateways connect AI models to applications while handling authentication, rate limiting, and monitoring. Here's how to implement these components:

Authentication and Security

Every AI API needs strong authentication. There are several ways to authenticate your API, but the two most common for AI APIs are OAuth 2.0 and API keys. If you were to ask me, I'd recommend using API keys (this is what OpenAI and Gemini use) for several reasons, including a quicker onboarding flow. Also ensure you use SSL/TLS encryption to secure data in transit.
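If you go the API key route, a minimal sketch of issuing and checking keys might look like the following. It assumes you show the plaintext key to the user once and store only a SHA-256 digest; the `sk_demo_` prefix and function names are hypothetical.

```python
import hashlib
import hmac
import secrets

KEY_PREFIX = "sk_demo_"  # hypothetical prefix to make keys recognizable


def hash_api_key(key: str) -> str:
    """Digest stored server-side instead of the plaintext key."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def generate_api_key() -> tuple[str, str]:
    """Return (plaintext_key, digest). Show the plaintext once; store the digest."""
    key = KEY_PREFIX + secrets.token_urlsafe(32)
    return key, hash_api_key(key)


def verify_api_key(presented_key: str, stored_digest: str) -> bool:
    """Compare digests in constant time to avoid timing side channels."""
    return hmac.compare_digest(hash_api_key(presented_key), stored_digest)


key, digest = generate_api_key()
print(verify_api_key(key, digest))
print(verify_api_key("sk_demo_wrong", digest))
```

A plain SHA-256 digest is reasonable here because the keys are long random strings. It would not be appropriate for user-chosen passwords.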

Even if you authenticate your requests, you will need to introduce API rate limiting to ensure fair usage of your API (and avoid resource starvation if someone DDoSes you). If you are using a subscription-based model, you will still need rate limits for short-term bursts of calls, but you will also need to enforce quotas to ensure monthly or annual usage aligns with the user's plan.
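The difference between a burst rate limit and a plan quota can be sketched with a token bucket plus a simple counter. This is a single-process illustration with hypothetical class names. A gateway would normally track this state in a shared store and reset the quota at each billing period, which is omitted here.

```python
import time
from typing import Callable


class TokenBucket:
    """Short-term limiter: allows bursts up to `burst`, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class MonthlyQuota:
    """Long-term limiter: total requests allowed in the current billing period."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.used = 0

    def consume(self) -> bool:
        if self.used >= self.limit:
            return False
        self.used += 1
        return True


def admit(bucket: TokenBucket, quota: MonthlyQuota) -> str:
    """Check the burst limit first, then the plan quota."""
    if not bucket.allow():
        return "rate_limited"  # typically surfaced as HTTP 429
    if not quota.consume():
        return "quota_exceeded"
    return "ok"


bucket = TokenBucket(rate_per_sec=5, burst=10)
quota = MonthlyQuota(limit=100_000)
print(admit(bucket, quota))
```

Returning distinct reasons lets you tell a client to slow down in one case and to upgrade its plan in the other.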

Performance and Scale

AI APIs must handle unpredictable load patterns efficiently around the world. Edge deployments distribute API endpoints globally, reducing latency and improving reliability. An edge-deployed gateway automatically scales to accommodate traffic spikes without manual intervention.

A great strategy to couple with edge deployments is caching. We wrote a walkthrough on caching AI responses that you should check out to learn more.
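As a rough sketch of response caching, the example below keys the cache on a hash of the model, prompt, and parameters, and expires entries after a fixed time. The `cache_key` function and `TTLCache` class are hypothetical, and the approach assumes identical requests should receive identical responses.

```python
import hashlib
import json
import time
from typing import Callable, Optional


def cache_key(model: str, prompt: str, params: dict) -> str:
    """Stable key: the same request yields the same hash regardless of dict order."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "params": params}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


cache = TTLCache(ttl_seconds=300)
key = cache_key("example-model", "What is an API?", {"temperature": 0})
if cache.get(key) is None:
    cache.set(key, "response from the model")  # placeholder for a real model call
print(cache.get(key))
```

Every cache hit is a request that never reaches your model, so it reduces both latency and inference cost. Decide up front whether cached responses count toward a customer's billed usage.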

Documentation

Clear documentation may not make your API more reliable, but it can teach users how to use your API properly and steer them away from common misuse patterns. OpenAPI specifications standardize endpoint documentation, request/response schemas, and authentication requirements.
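To show what an OpenAPI description covers, here is a minimal OpenAPI 3.1 document built as a Python dictionary and printed as JSON. The `/v1/completions` path, field names, and bearer-token scheme are hypothetical choices for illustration.

```python
import json

# Minimal OpenAPI 3.1 description of one hypothetical endpoint.
openapi_spec = {
    "openapi": "3.1.0",
    "info": {"title": "Example AI API", "version": "1.0.0"},
    "paths": {
        "/v1/completions": {
            "post": {
                "summary": "Generate a completion",
                # Authentication requirement for this operation.
                "security": [{"bearerAuth": []}],
                # Request schema.
                "requestBody": {
                    "required": True,
                    "content": {
                        "application/json": {
                            "schema": {
                                "type": "object",
                                "required": ["prompt"],
                                "properties": {
                                    "prompt": {"type": "string"},
                                    "max_tokens": {"type": "integer", "minimum": 1},
                                },
                            }
                        }
                    },
                },
                # Documented responses, including auth and rate-limit errors.
                "responses": {
                    "200": {"description": "Completion generated"},
                    "401": {"description": "Missing or invalid API key"},
                    "429": {"description": "Rate limit or quota exceeded"},
                },
            }
        }
    },
    "components": {
        "securitySchemes": {"bearerAuth": {"type": "http", "scheme": "bearer"}}
    },
}

print(json.dumps(openapi_spec, indent=2))
```

Documenting the 401 and 429 responses alongside the success case tells integrators up front how authentication failures and rate limits will surface.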

Considerations for AI Companies

1. Regulations and Compliance

AI is in the spotlight worldwide. In places like the EU, there’s the AI Act (in force since August 2024, with obligations phasing in over time), plus GDPR for data protection. The U.S. has its own patchwork of rules, with California adopting stricter privacy laws.

- Recommendation: Build compliance in from day one. If you handle sensitive data, be mindful of how (and where) it’s stored and processed.

2. Competition and Differentiation

With the rise of AI startups and giants releasing new models, you’ll need to stand out.

- Recommendation: Focus on a niche (e.g., healthcare, finance, manufacturing) or a specialized feature set (like Archetype’s on-device capabilities). Offer direct value for a specific use case.

3. Performance and Reliability

Nothing hurts credibility like a slow or inconsistent API.

- Recommendation: Use caching, load balancing, and auto-scaling. Make sure you have a status page or an SLA (Service-Level Agreement) for enterprise clients.

4. Security and Data Privacy

You’ll need robust authentication and encryption—especially if you’re processing sensitive data (like personal health info or financial data).

- Recommendation: Implement OAuth or token-based auth, encrypt data at rest and in transit, and consider 2FA for critical systems.

Bringing it All Together

Building and monetizing an AI model via an API is, in principle, simpler than ever, thanks to cloud services, API management platforms (like Zuplo!), and developer-friendly billing systems. But to succeed, you still need to plan carefully.

- Choose a Monetization Strategy that aligns with your target market.

- Invest in Tools (cloud, API gateway, billing) that help you scale quickly and avoid technical debt.

- Stay Ahead of Regulations and keep your AI usage transparent.

- Differentiate your model by solving real problems in a unique way.

At Zuplo, we’ve helped countless startups and enterprises launch APIs that can handle real production traffic, integrate with billing solutions, and remain secure and compliant. I’m a huge believer in usage-based pricing for AI startups — especially if you’re confident your use cases will grow with your customers. But if you prefer flat tiers or enterprise contracts, that can also work with the right planning.

Final Thoughts

As an AI founder or engineering executive, you’re in the driver’s seat of a truly exciting field. Deploying your AI model behind an API is not only practical — it’s often the best way to reach a global developer audience and tap into new revenue streams. By leveraging robust infrastructure, a flexible API gateway, and thoughtful compliance practices, you can create a sustainable business around your AI offerings.

If you’re ready to level up your API game, feel free to check out Zuplo for an approachable, scalable solution that grows with you. We’d love to see what amazing AI solutions you can build, monetize, and share with the world. Good luck, and happy innovating!