Ever wonder if a mechanism exists that could stop API users from hammering your API with traffic? What about enforcing a set amount of API calls based on a monetization tier? This is precisely the role that API rate limiting can play in controlling how your APIs are used. Rate limiting is one of the fundamental elements of API access control and security. Uncontrolled API access can lead to server overload, performance degradation, and potential security risks. This is where API rate limiting becomes essential.

In this blog post, we'll give a comprehensive overview of API rate limiting. We'll explore its underlying mechanisms, the reasons to use it, and the various methods used to implement it. Lastly, we'll show you how to add API rate limiting to your APIs in just a few simple steps. Let's start by digging deeper into the fundamentals of rate limiting.

Table of Contents

- What is API Rate Limiting?

- Why Do You Need API Rate Limiting?

- How Does API Rate Limiting Work?

- Different Methods of Rate Limiting

- How Can Users Handle API Rate Limits?

- Rate Limiting vs. API Throttling

- What Are The Best Practices for API Rate Limiting?

- API Rate Limiting Examples

- How to Implement API Rate Limiting

- Conclusion

What is API Rate Limiting?

API rate limiting is, in essence, a set of rules or constraints that govern how often an application or user can access an API. It provides a mechanism to monitor and control the flow of incoming requests to an API server.

How does it work? Typically, the simplest forms of rate limiting are implemented by defining static thresholds for the number of requests allowed within a specific time window. For instance, a simple rate limit might be 100 requests per minute per user. If an application exceeds this limit, the API server or API gateway will block any subsequent requests and respond with an error message, usually a 429 Too Many Requests status code. Once the rate limit has reset, in this example, after one minute, the API will begin accepting requests again until the user exceeds 100 requests per minute.

One thing to note is that rate limiting differs from enforcing an API quota. Rate limiting generally covers shorter time windows, such as requests per second, minute, or hour. Quotas, however, are generally used to enforce longer intervals, such as requests per day, week, month, or year. Often, the two mechanisms are used together, but they remain distinct limits.

Why Do You Need API Rate Limiting?

So, do you really need API rate limiting, and is it THAT crucial to implement? Almost all critical or public APIs have rate limits set for various reasons and benefits. Let's break down the key reasons why you should seriously consider rate limiting APIs:

Preventing Server Overload

When a massive amount of traffic hits your API without rate limiting, your server could get swamped. This could potentially cause slow response times, increased latency, and even complete outages. Rate limiting helps prevent this by controlling the number of requests allowed to come through to the API, keeping the amount of load to an acceptable and expected level for optimal performance of each API request and response.

Protection Against Denial-of-Service (DoS) Attacks

Speaking of server overload, when malicious actors intentionally flood your API with requests to disrupt your service, the result is a denial-of-service (DoS) attack. Sometimes your own customers might DDoS you by accident with a buggy useEffect in React. Rate limiting acts as a first line of defense against such attacks, blocking excessive requests from known bad actors or IP addresses. When using an API gateway, the request doesn't even reach the upstream API; it is blocked at the gateway before it has a chance to disrupt service.

Ensuring Fair Usage

By setting limits, you create a policy of fair access to API resources and prevent any single user or application from consuming excessive resources and impacting the experience of others. This is particularly important for APIs with tiered pricing plans, where different users have varying usage allowances. Rate limiting helps to keep users within the bounds of their tier.

Managing Resource Consumption

APIs often rely on limited resources like databases, storage, and compute. Rate limiting helps you control the consumption of these resources, preventing overuse and abuse to ensure continuous availability for all users.

Back-end Service Protection

Sometimes, your API might be a gateway to other critical back-end services. Rate limiting helps protect these services from being overwhelmed by excessive requests originating from your API.

Cost Control

For APIs that involve costs associated with usage (e.g., external service calls, data transfer), rate limiting can help manage and control those costs by preventing runaway consumption. For instance, let's say your API utilizes OpenAI's APIs for AI functionality; you don't want users to have free rein to consume as much as they would like. Instead, rate limiting allows you to control costs for services that are an expense to your API operation by limiting the amount of traffic at a given time. Side Note: We wrote a guide to rate limiting OpenAI requests in case you are running into the issue above.

In essence, rate limiting is a crucial tool for maintaining your API endpoint's stability, performance, and security. It's about ensuring a reliable and consistent user experience while protecting your infrastructure (and wallet) from abuse and overload.

How Does API Rate Limiting Work?

Alright, so how does it all work? Let's pop the hood and see how this rate limiting magic happens. At its core, rate limiting involves tracking a particular client's requests (identified by an IP address, API key, or user ID) within a defined time window.

Here's a simplified breakdown of the process:

- Request Received: When your API receives a request, the rate limiting system kicks in.

- Identify the Client: The system identifies the client making the request using their unique identifier (IP address, API key, etc.).

- Check Request History: The system checks the request history for that client within the configured time window. This might involve looking up records in a database, cache, or dedicated rate limiting service.

- Evaluate Against Limits: The current request count is compared against the defined rate limit.

- Allow or Block: The request is processed and passed through to the API if the client is within their allowed limit. If they exceed the limit, the request is rejected with a 429 Too Many Requests response.

Now, this is a very basic overview. In reality, there are various algorithms and techniques used for rate limiting, each with its own nuances and complexities. In the next section, we will take a look at the particulars of potential methods that can be used to calculate and enforce rate limiting.

Different Methods of Rate Limiting

As mentioned, at its core, rate limiting relies on algorithms and techniques to monitor and control the flow of requests to an API endpoint. Although there are quite a few to pick from, here are some of the most common ones implemented by rate limiting solutions and API gateways:

- Token Bucket: Imagine a bucket that is refilled with tokens at a fixed rate, up to a maximum capacity. Each API request consumes one token from the bucket. If the bucket is empty (the client has no requests remaining), the request is rejected. This algorithm allows for some burstiness, as clients can accumulate tokens up to the bucket's capacity, but it also enforces a consistent average rate over time.

- Leaky Bucket: Similar to the token bucket algorithm, but instead of tokens, it's like a bucket with a hole at the bottom. Requests flow into the bucket, and they "leak" out at a constant rate. If the bucket overflows, new requests are rejected. The leaky bucket algorithm smooths out bursts of traffic and ensures a more consistent flow of requests.

- Fixed Window: This algorithm divides time into fixed windows (e.g., 1 minute). Each window has a defined limit for the number of requests allowed. Once a window ends, the counter resets. While the fixed window algorithm is simple to implement, it can let through bursts at window boundaries: a client can use its full allowance at the end of one window and again at the start of the next, briefly doubling the effective rate.

- Sliding Window: A more sophisticated approach that combines aspects of the fixed window and sliding log algorithms. The sliding window algorithm tracks requests within a time window that moves with each request instead of resetting at fixed boundaries, allowing for more flexibility and smoother rate limiting.

- Sliding Log: This algorithm maintains a timestamped log of each request. To evaluate a new request, the system checks the log for requests made within the defined time period. This provides accurate tracking but can be resource-intensive for high-traffic APIs.

These are just a few examples, and there are other variations and hybrid approaches. The choice of algorithm depends on factors like the specific needs of your API, the desired level of granularity, and the available infrastructure. Beyond algorithms, rate limiting also involves various techniques for tracking requests. If you're using an API gateway, much of the time, you'll be able to select exactly how you want each request tracked. Here are a few ways that you may see request counts tracked:

- By IP Address: Identifying clients based on their IP address. This is simple but can be inaccurate if multiple users share the same IP (e.g., behind a NAT).

- By API Key: Using unique API keys to identify and track individual clients. This provides more accurate tracking and allows for differentiated rate limits.

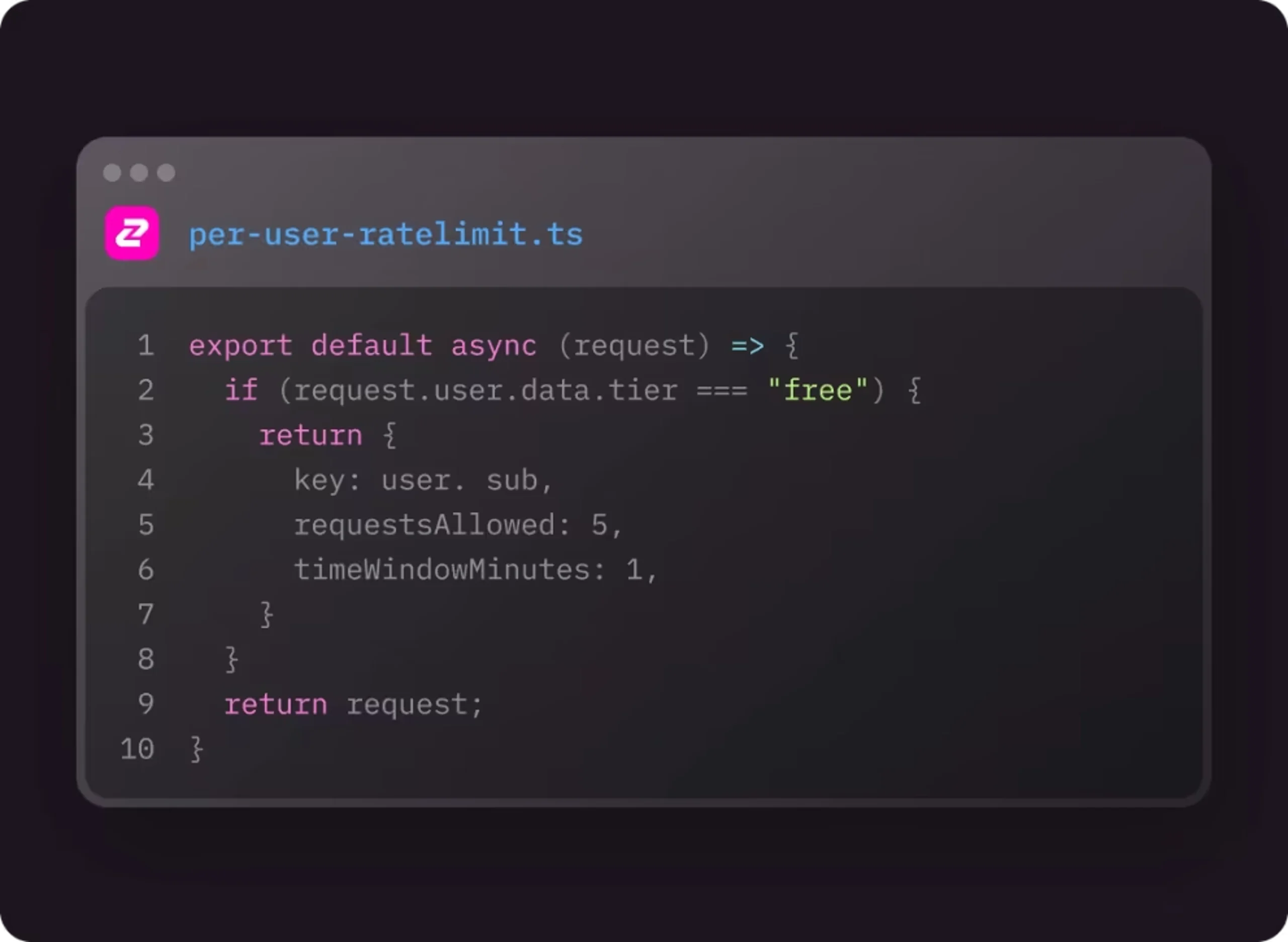

- By User ID: Tracking requests based on authenticated user IDs. This is useful for applications where users have individual accounts and usage quotas. Here's an example of per-user rate limiting for Supabase.

- Header-Based Tracking: Using custom HTTP headers to identify clients or track specific types of requests.

Combining both the algorithm and the various methods for tracking, you can implement the best fit for rate limiting for your particular use case. As you can see, there is a fair amount of flexibility in how it can be done.

Dynamic Rate Limiting

If your API is used by large enterprises, it's common to issue Subaccount API Keys (subkeys), so simply rate limiting by a single key or user ID won't cut it. Instead, you will likely want to offer an organization-level rate limit (in addition to a per-key rate limit) that is shared across the company, to enforce fair usage across multiple enterprise customers. The problem is that you might not always have information about the organization at request time. This is where Dynamic Rate Limiting comes in, allowing you to read data from external sources at request time to determine what rate limit to apply. Check out our Supabase dynamic rate limiting example to see this in action.

Dynamic rate limiting is commonly used in APIs that offer subscription plans with different rate limits (ex. higher rate limits for more expensive plans) - allowing you to associate users with their plans at runtime.

Complex Rate Limiting

In some APIs, the number of requests does not translate well into the costs you bear as an API provider. Examples include resource-based APIs (ex. file upload/download) as well as many AI APIs, which charge based on tokens. In these cases, you want Complex Rate Limiting, which gives you the ability to rate limit on whatever type of token or property you want (ex. LLM tokens, file size, etc.).

How Can Users Handle API Rate Limits?

It's likely that many of your users will run up against your new API rate limits after you impose them, especially if you had no traffic controls before this point. Expect to get support tickets from low-tech users asking what the error is and how they can get around it. Rather than relenting and increasing their rate limits, encourage the following best practices:

- Minimize Requests: Ensure that all of their API calls are actually necessary and they aren't fetching more data than they need.

- Batching Requests: Instead of making multiple individual calls to fetch different pieces of data, consider whether they can consolidate these requests into a single API call. For example, if they need to retrieve information about multiple users, offer them a batch API endpoint to fetch data for all users in one go.

- Caching Responses: Caching involves storing the results of previous API calls and reusing them when the same data is needed again. This avoids redundant requests and significantly reduces the load on the API server.

We cover these optimizations and other strategies for discussing rate limits with customers in our exceeding rate limits guide.

Additionally, you can also view this as an opportunity to upsell these customers, offering them higher rate limits as a part of an enterprise plan.

Rate Limiting vs. API Throttling

So, how does this all compare to API throttling? While often used interchangeably, rate limiting and API throttling are distinct concepts with subtle but significant differences. Although they both aim to manage API traffic, they have different approaches and goals.

As we've already seen, with rate limiting, requests that exceed the limit are simply stopped and rejected. A request stopped by a rate limiting policy never makes it to the upstream; it is as if it never happened. With throttling, on the other hand, a request from a client that has reached its limit is queued and executed once the limit resets or capacity is once again available to that user. In short, instead of the hard stop we see with rate limiting policies, throttled requests still go through eventually, which keeps traffic flowing at a steady rate.

Let's break it down in table form so you can see these two approaches side-by-side:

| Feature | Rate Limiting | API Throttling |
|---|---|---|
| Purpose | Prevent abuse, ensure fairness | Maintain performance, prevent overload |
| Mechanism | Hard limits, immediate rejection | Dynamic rate adjustment, controlled flow |
| Impact on Client | Requests are blocked when the limit is reached | Requests are delayed or processed at a slower rate |

Both rate limiting and throttling are valuable tools in your API management arsenal. Choosing the right approach depends on your specific needs and the goals you want to achieve when it comes to controlling API traffic.

What Are The Best Practices for API Rate Limiting?

Now, how should one go about implementing rate limiting? Adding a rate limit to your API effectively requires a thoughtful approach: you'll want to balance what your users need with what your business needs. For an in-depth understanding, we recommend reading our 10 Best Practices for API Rate Limiting guide. In case you don't have time for that, let's look at a few best practices to help along the journey:

Define Clear and Consistent Limits

Establish clear and consistent rate limits across your API. Users should easily be able to find and understand rate limits, and businesses should avoid setting arbitrary limits. The limits should align with your API's capacity and expected usage patterns. Document these limits clearly in your API documentation so developers understand the constraints.

Provide Informative Error Messages

When a client exceeds their rate limit, return a clear and informative error message with the 429 Too Many Requests status code. Depending on who your API consumers are, you may want to include details like the current rate limit being applied, the time window, and when the client can retry their request.

Offer Granular Rate Limits

Consider implementing different rate limits for different endpoints, user groups, or subscription tiers. This allows you to fine-tune your rate limiting strategy and provide a more tailored experience for different users. Doing this can also expand revenue and improve developer experience. For instance, smaller hobbyists just playing around with your API might be happy with a low/no-cost tier with a low rate limit and enterprise customers may require a larger rate limit at a high price. Having flexibility and appropriate limits is a great way to open up revenue generation more widely.

Use Appropriate Time Windows

Choose time windows that align with your API's usage patterns and goals. Shorter time windows (e.g., seconds or minutes) prevent bursts, while longer windows (e.g., hours or days) are suitable for enforcing overall usage quotas. This really highlights the usefulness of combining both API rate limiting and quotas as a part of your overall traffic management solution.

Implement Rate Limiting Gradually

Start with generous rate limits and gradually tighten them as you gather data on usage patterns. This allows you to fine-tune your strategy without disrupting existing users. If you suddenly add a rate limit to your API, customers may feel the impact very quickly, to varying degrees. As we mentioned, when an API request is stopped by a rate limit, it is dropped. If developers have not factored this into their logic, the consequences on the consumer side might not be well received.

Monitor and Analyze Rate Limiting Data

Track key metrics like request volume, error rates, and latency to understand how your rate limiting is performing. This data can help you identify potential issues, optimize your limits, and detect abuse. How often are users hitting a rate limit? Are rate limits still too high to prevent performance issues? Collecting and analyzing these metrics is critical to ensuring your rate limit strategy is working for you and your users.

Choose the Right Algorithm

Remember, there are different algorithms and ways to apply rate limiting. Select the rate limiting algorithm that best suits your needs, looking at factors like granularity, burst handling capabilities, and performance implications of a particular approach.

Use an API Gateway

The easiest and most scalable way to implement rate limiting is to use an API gateway, like Zuplo. With a gateway, you can easily set and update API rate limits with a few clicks. For rate limits tied to monetization, platforms like Zuplo can apply rate limiting based on the tier that a user is subscribed to automatically. One-off rate limiting solutions are much more difficult to build and maintain compared to this out-of-the-box functionality that can be plugged in instantly.

Communicate Changes Clearly

Communication is probably one of the most important pieces of the puzzle. If you are adding rate limits or need to adjust them, communicate these changes clearly to your users in advance. Provide ample notice and explain the reasons for the changes to maintain transparency and trust.

If you follow these best practices, you should be well-covered when it comes to implementing and maintaining API rate limits. By balancing the benefits to you and your users and always making communication about rate limits easy to access and understand, things should roll out quite smoothly. Next, let's look at what API rate limiting looks like in action.

API Rate Limiting Examples

Rate limiting can be used to help with quite a few different use cases. It can be a primary mechanism, such as directly protecting a public API, or a secondary mechanism, such as a backup when front-end logic is insufficient. Let's look at three scenarios where it can come in handy:

Protecting a Public API

Imagine you have a public API that provides weather data. To prevent abuse and ensure fair access for all users, you might implement a rate limit of 10 requests per minute. Each user must call the API with their own API key, and their requests are tracked against that key, preventing any single user from overwhelming the API with excessive requests.

Managing API Usage for Different Subscription Tiers

You might implement tiered rate limits for an API with different subscription tiers (e.g., free, basic, premium). Free users might be limited to 50 requests per minute, while premium users get 500 requests per minute. From a business perspective, having these limits can help to push users to upgrade to higher tiers for increased access.

Preventing Brute-Force Attacks on a Login Endpoint

Let's say you have a login UI that has some front-end logic to stop users from attempting to log in after 5 attempts. As a secondary step, you can also enforce a rate limit at the API level to protect the underlying API from brute-force login attempts. For example, you might limit login attempts to 5 per minute per IP address. This helps prevent attackers from repeatedly trying to guess user credentials and running dictionary attacks and similar tactics.

How to Implement API Rate Limiting

Alright - we've covered most of the technical concepts for API rate limiting. If you've made it this far, you're likely convinced that implementing rate limits is important. Let's get our hands dirty now - here's what you need to know and how to implement it:

Business Consideration

If you're reading this guide, you may not actually be ready to implement rate limiting in your API. Beyond the technical details, there are many business considerations and prerequisite data needed to ensure a good roll-out of rate limits. Here are some questions to ask yourself:

- What data do I need to configure my rate limits in the first place?

- What observability tools do I need to get that data?

- Should my rate limits be public or secret?

- Do I prefer low-latency or high-accuracy in my enforcement?

These are pretty tough questions! Lucky for you, we provide insights on each of these in our subtle art of API rate limiting guide.

Developer Experience - How to Communicate API Rate Limits

Your customers are going to run into your rate limits eventually, and when they do, you want to equip them with the right tools and information to handle the issue themselves. Here are the different levels of developer experience you can provide when communicating your rate limits:

Bronze Tier: 429 Response

Simply returning a 429 error response with no details is better than nothing - but doesn't leave your customer with many options.

Silver Tier: Problem Details Response

The Problem Details standard (RFC 7807, since superseded by RFC 9457) lets you communicate more information to your customer in a standardized way, using a JSON body with fields such as type, title, status, and detail.

You can include whatever information you want in the detail field, including when the user should try again. Unfortunately, the detail field isn't a standard, machine-readable way of communicating that information. For that, you need the Retry-After header.

Gold Tier: Retry-After Header

RFC 7231 (which defined HTTP/1.1 semantics and has since been superseded by RFC 9110) includes a Retry-After header, which indicates how long the user ought to wait before making a follow-up request. The value can be either an HTTP-date or a number of seconds.

For example, `Retry-After: 3600` asks the client to wait 1 hour, while `Retry-After: Fri, 31 Dec 1999 23:59:59 GMT` asks it to wait until that date.

The benefit of expressing this data in a header is that your code can parse this value and adjust its behavior accordingly (ex. pause requests until the retry-after date has arrived, or the duration has elapsed). This can be a good or a bad thing (as highlighted in the subtle art of rate limiting) depending on how much you trust the person calling your API. Communicating the retry-after doesn't stop your customers from running into rate limits in the first place, however. For that, you need the Rate Limit Headers.

Platinum Tier: Rate Limit Headers

In an ideal world, a customer wouldn't just know that they hit a rate limit; they would know about the rate limits beforehand and how many requests they had left before hitting them. The first attempt at this came a few years back in this draft, which proposed the following headers:

- RateLimit-Limit: The applicable rate limits for the current request

- RateLimit-Remaining: How many requests the user can still make within the current time window

- RateLimit-Reset: The number of seconds until the rate limit resets

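A server-side sketch in Python: given the state of a fixed-window limiter (how many requests were used in the current window), it computes the three headers from that early draft. The function name and the numbers in the usage line are hypothetical, and windows are assumed to align to multiples of `window_seconds`.

```python
import math


def draft_ratelimit_headers(limit: int, used: int, window_seconds: int,
                            now: float) -> dict[str, str]:
    """Build RateLimit-Limit, -Remaining and -Reset for a fixed-window limiter."""
    remaining = max(0, limit - used)
    # Seconds until the current window ends, rounded up so clients never retry early.
    reset = math.ceil(window_seconds - (now % window_seconds))
    return {
        "RateLimit-Limit": str(limit),
        "RateLimit-Remaining": str(remaining),
        "RateLimit-Reset": str(reset),
    }


print(draft_ratelimit_headers(limit=100, used=58, window_seconds=60, now=30.0))
# {'RateLimit-Limit': '100', 'RateLimit-Remaining': '42', 'RateLimit-Reset': '30'}
```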

This is a big step up in communication to your customers. Enterprise-grade APIs like Telnyx actually adopted these headers to communicate their rate limits. The RateLimit-Limit header can carry even more information; you can even use it to disclose the length of your window (in seconds).

These headers are pretty simple and handle request-based rate limiting very well. But what about when you are rate limiting on something other than requests (i.e., the Complex Rate Limiting we discussed earlier)? You can repurpose RateLimit-Limit for that too!

For example, the headers could tell a customer that they can download up to 5000 kilobytes per hour and have already consumed 300 kb.

Unfortunately, this draft never became an official RFC, so although it is nice, we can't necessarily recommend implementing it. Instead, there is a newer draft that defines a slightly different set of headers.

- RateLimit-Policy: Allows servers to advertise their quota policies, providing clients with detailed information about rate-limiting rules

- RateLimit: Communicates the current state of the rate limit, including remaining quota and reset time.

These two headers have a few components to them. In the RateLimit-Policy header:

- Named quotas such as "burst" and "daily" are separate quotas expressed in one header, with those names indicating which scenarios or time periods they apply to

- the q parameter is the quota allocated

- the qu parameter (optional) indicates the quota units: requests, content-bytes, or concurrent-requests

- the w parameter is the time window of the quota in seconds

- the pk parameter (optional) is the partition key the quota is allocated to. This could be a user ID, hashed API key, or some other ID the server chooses to generate.

The corresponding RateLimit header reports the current state of each quota:

- a name such as "daily" matches up with a quota expressed in the RateLimit-Policy header

- the r parameter is the remaining quota units left

- the t parameter is the number of seconds until the quota resets

- the pk parameter (optional) is the same partition key as above

We'd definitely encourage you to read the referenced drafts before deciding to implement these headers (especially with the lack of tooling support) - but this information will likely be valuable to your customers.

Implementing Rate Limiting With Zuplo

We'll preface this by saying you don't need to use Zuplo to rate limit your API. We already have native guides for rate limiting in NodeJS and Python if you're building with those. We will say that rate limiting at the API layer at the service level instead of the gateway level is definitely limiting (no pun intended) - and that Zuplo has the best damn rate limiter on the planet. Read on if you'd like to see how easy it can be.

Zuplo makes it incredibly easy to add rate limiting to your APIs. Here's a quick tutorial on how to implement API rate limiting with Zuplo:

- Sign up for a free account if you don't already have one

- Add your APIs to Zuplo

- Navigate to one of your API routes in the Route Designer (Code > routes.oas.json).

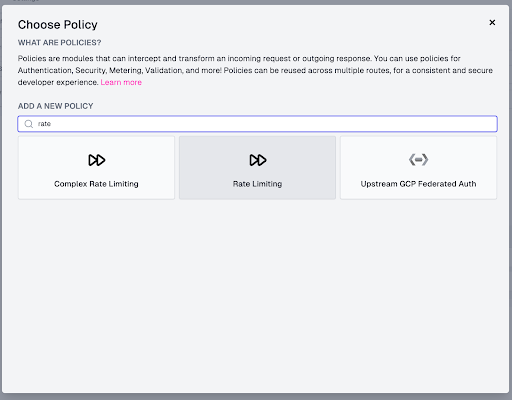

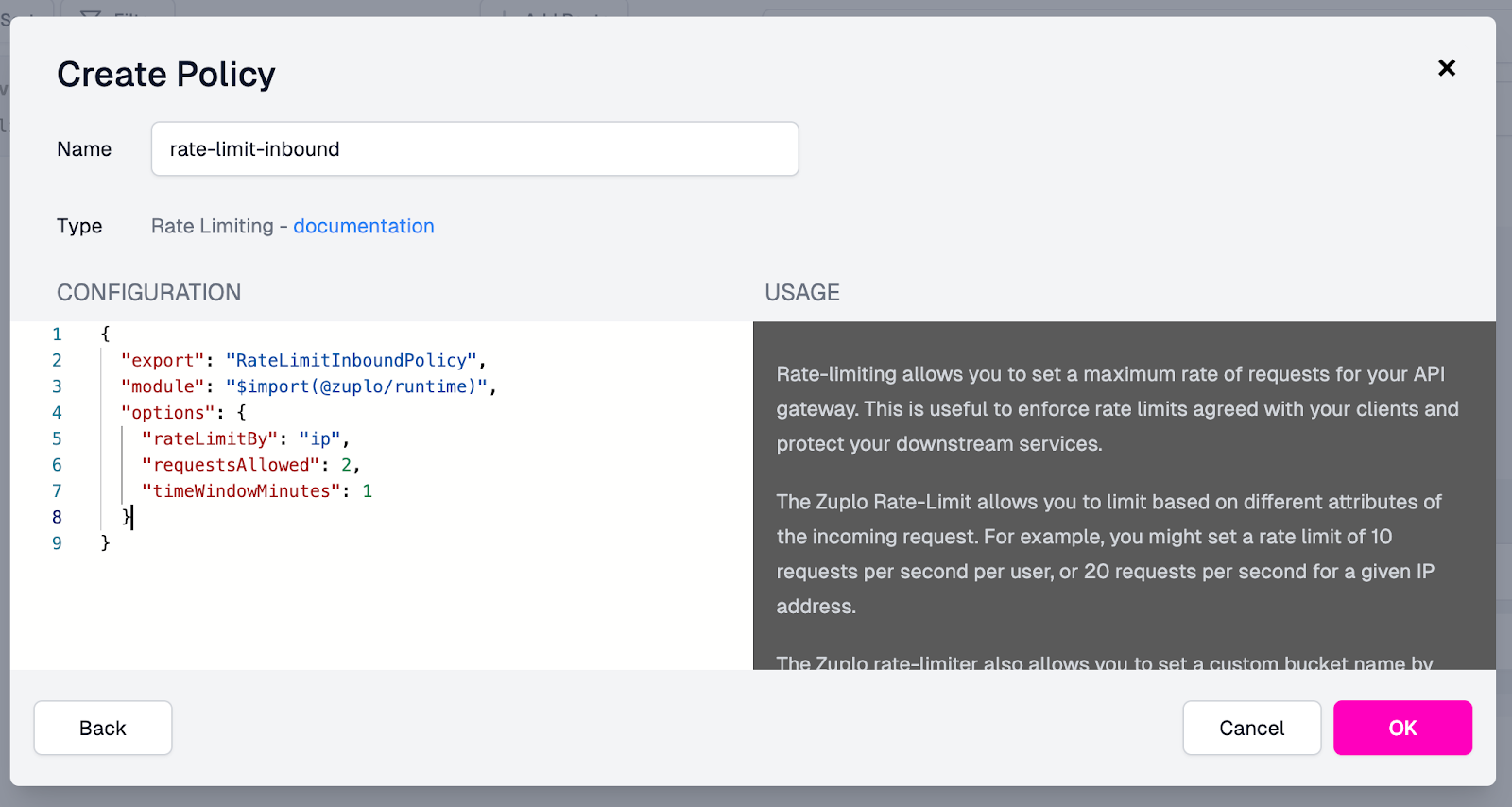

- Click the Policies dropdown, then click Add Policy on the request pipeline. Search for the rate limiting policy (not the "Complex" one) and click it.

By default, the policy will rate limit based on the caller's IP address (as indicated by the rateLimitBy field). It will allow two requests (requestsAllowed) every 1 minute (timeWindowMinutes).

You can explore the rest of the policy's documentation and configuration in the right panel.

-

To apply the policy, click OK. Then, save your changes to redeploy. At this point, your rate limit policy is live!

-

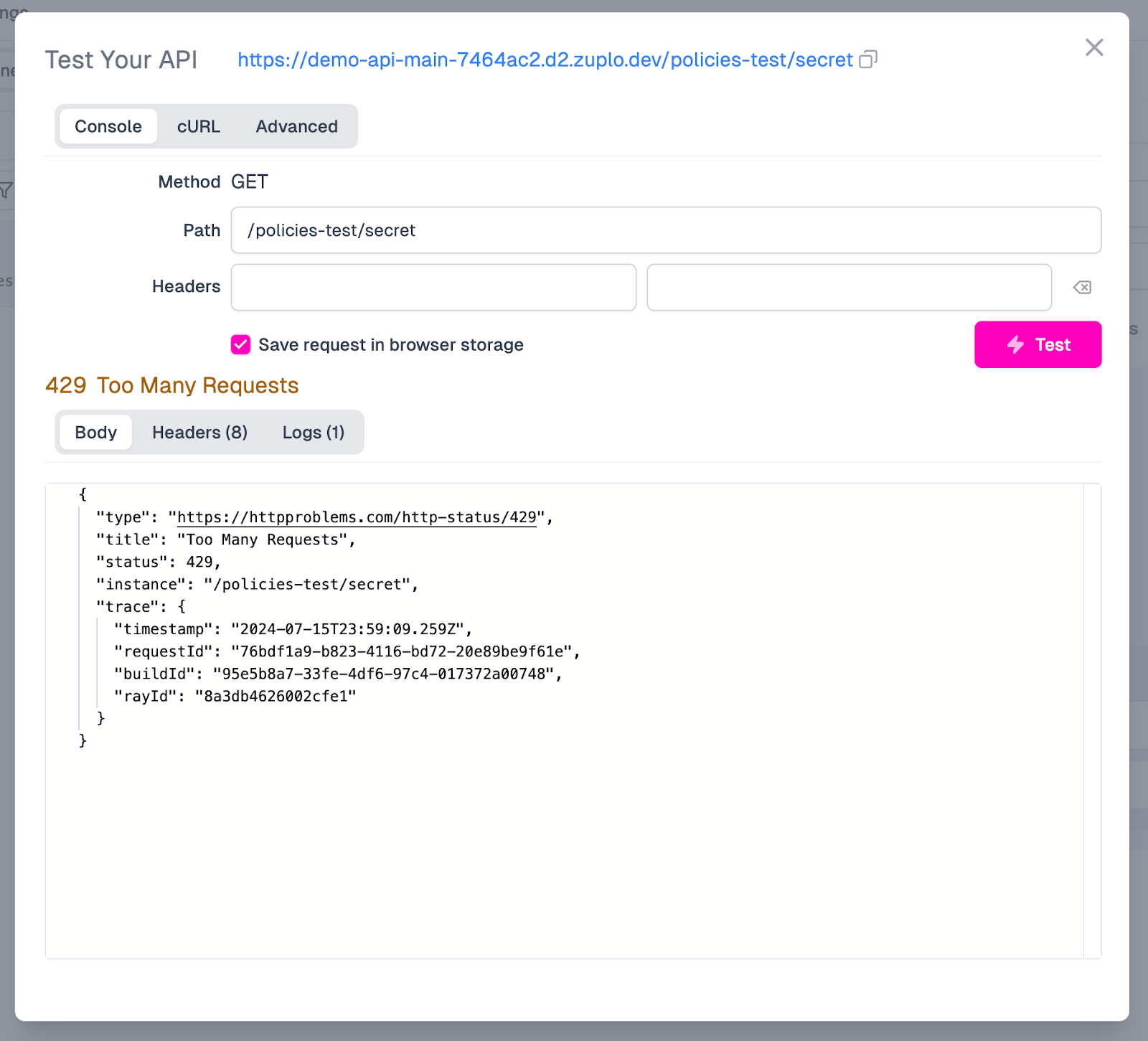

Now, try firing some requests against your API. You should receive a 429 Too many requests on your 3rd request.

Your rate limiting policy is now intercepting excess requests, protecting your API! That's it! Zuplo handles all the underlying complexity, allowing you to focus on building and deploying your API. You can customize your rate limiting policies by adjusting time windows, configuring custom error messages, and setting up different limits for different users or endpoints. For more complex use cases, you can also use Zuplo's Complex Rate Limiting policy, which offers even wider configurability.

Conclusion

Rate limiting is a critical piece of API management for API providers. Leaving your APIs without a rate limiting policy is insecure and can lead to abuse of endpoints. In the end, users will suffer from poor performance, and you may end up missing revenue. Following the best practices and examples in this guide, there's no reason not to implement rate limiting for your APIs!

Ready to take control of your API traffic and experience the power of rate limiting firsthand? Sign up for a free Zuplo account today and start adding rate limiting to your APIs in minutes. With a developer-focused UI and easy-to-implement API management policies, Zuplo makes it easier than ever to implement and manage rate limiting for your APIs. Don't let your API be vulnerable to abuse or overload. Take the first step towards more secure and performant APIs with Zuplo.