The OpenAI Apps SDK and the new MCP Apps extension are powerful ways for users to interact with AI systems and capabilities inside applications they already use (like ChatGPT and Claude). The OpenAI Apps SDK extends MCP by using resources and tools to serve UI components and agentic capabilities directly within a chat interface.

Zuplo's MCP Server Handler is a great fit for integrating with the OpenAI Apps SDK because it lets you expose your existing APIs and policies through a robust MCP server.

Let's build a simple app with OpenAI's Apps SDK and the Zuplo MCP Server Handler, and take a deep look at how it works under the hood in MCP.

This is an advanced guide to building cutting-edge MCP features: some of these semantics and interfaces are likely to change in the future. Be sure to read our getting started guide on MCP with Zuplo and the docs for building powerful MCP servers on Zuplo.

Sample API

First, let's start with a simple demo API. This is a stub weather API that takes latitude and longitude query parameters, returning the weather conditions for that location.

In config/routes.oas.json, add the following route inside the paths object:

Once deployed on Zuplo, we can hit this API using curl and it'll return a payload of weather data:

Notice that we provide the lat and lon query parameters. These will be used later as arguments to the MCP tool that is mapped to this endpoint.
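To make the query parameters concrete, here is a small TypeScript sketch that builds the /weather request URL and fetches it. The gateway host https://your-gateway.zuplo.app is a hypothetical placeholder for your own deployment. The response is left as untyped JSON, because its exact shape depends on the backend.

```typescript
// Hypothetical gateway URL: replace with your own Zuplo deployment.
const GATEWAY_URL = "https://your-gateway.zuplo.app";

// Builds the /weather URL with lat and lon passed as query parameters.
function buildWeatherUrl(base: string, lat: number, lon: number): string {
  const url = new URL("/weather", base);
  url.searchParams.set("lat", String(lat));
  url.searchParams.set("lon", String(lon));
  return url.toString();
}

// Fetches weather data from the gateway; the payload shape is not assumed here.
async function getWeather(lat: number, lon: number): Promise<unknown> {
  const res = await fetch(buildWeatherUrl(GATEWAY_URL, lat, lon));
  if (!res.ok) {
    throw new Error(`Weather request failed with status ${res.status}`);
  }
  return res.json();
}
```

These same two values are what the MCP tool will later receive as arguments.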

For the purposes of this demo, the /weather API endpoint uses the urlForwardHandler to forward requests to the demo https://weather.zuplo.io backend that returns the same weather data every time. In a real production setup, you would set the baseUrl to your actual weather API backend.

Learn more about the URL Forward Handler in our docs.

MCP Server

Next, we need to set up the MCP server so that it can use our /weather endpoint and expose it as a tool. On a new /mcp endpoint, we can build a POST route that uses the mcpServerHandler. This automatically bootstraps a full MCP server whose tools have JSON Schema-validated inputs.

In config/routes.oas.json, add the following route inside the paths object:

Let's look more closely at the options we provided to the MCP server handler:

- name is the name of the MCP server. This is shown to MCP clients when they initialize with the server.
- version is the version of the MCP server itself. This is also shown to MCP clients during initialization.
- includeStructuredContent adds a structuredContent object to tool call results. This is required by the OpenAI Apps SDK in order to populate window.openai with tool call results for the UI widgets to use.
- operations is a list of objects that associate an OpenAPI file with operation IDs. These mapped OpenAPI operations are then transformed into MCP entities (like tools and resources) as defined by each operation's x-zuplo-route.mcp configuration. By default, operations are treated as tools and get several sensible defaults. Since we are adding the getWeather operation, we can use it as a tool immediately.

Learn more about the configuration options for the mcpServerHandler in the Zuplo docs.
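The name and version options presumably reach clients through the serverInfo field of the MCP initialize handshake. Below is a minimal TypeScript sketch of that exchange. It assumes the 2025-06-18 MCP protocol revision, which is the revision that introduced structuredContent. The client name and the sample server name are hypothetical placeholders.

```typescript
// Minimal JSON-RPC 2.0 request shape used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Subset of the initialize result a server sends back.
interface InitializeResult {
  protocolVersion: string;
  capabilities: Record<string, unknown>;
  serverInfo: { name: string; version: string };
}

// The first request an MCP client sends; clientInfo values are placeholders.
function buildInitializeRequest(id: number): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "initialize",
    params: {
      protocolVersion: "2025-06-18",
      capabilities: {},
      clientInfo: { name: "example-client", version: "0.0.1" },
    },
  };
}

// Formats the server identity a client would display (name@version).
function describeServer(result: InitializeResult): string {
  return `${result.serverInfo.name}@${result.serverInfo.version}`;
}
```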

We can list the tools on this server by calling the tools/list MCP method:

Note that we are making a POST request to the /mcp endpoint with a hand-crafted JSON-RPC 2.0 payload. Zuplo builds a stateless MCP server that uses the Streamable HTTP transport, so every request a client sends to the server will be a POST. The results show us the tools on the server by name. They also include the inputSchema derived for each tool from the input parameters of the OpenAPI route mapped to that tool:
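The same tools/list call can be sketched in TypeScript instead of curl. This sketch assumes the server replies with a plain JSON body rather than an SSE stream. The /mcp URL is whatever your gateway exposes. The required-argument helper is a hypothetical convenience, and it assumes the query parameters are nested under a required queryParams property.

```typescript
// Minimal JSON-RPC 2.0 request for the MCP tools/list method.
function buildToolsListRequest(id: number) {
  return { jsonrpc: "2.0" as const, id, method: "tools/list", params: {} };
}

// Streamable HTTP clients POST JSON and must accept either JSON or an SSE stream.
const MCP_HEADERS: Record<string, string> = {
  "Content-Type": "application/json",
  Accept: "application/json, text/event-stream",
};

// Subset of a tool entry in the tools/list result.
interface ListedTool {
  name: string;
  inputSchema: { type: "object"; properties?: Record<string, unknown>; required?: string[] };
}

// POSTs tools/list to the given /mcp URL; assumes a JSON (not SSE) response.
async function listTools(mcpUrl: string): Promise<ListedTool[]> {
  const res = await fetch(mcpUrl, {
    method: "POST",
    headers: MCP_HEADERS,
    body: JSON.stringify(buildToolsListRequest(1)),
  });
  const body = (await res.json()) as { result?: { tools: ListedTool[] } };
  return body.result?.tools ?? [];
}

// Maps each tool name to the top-level properties its input schema requires.
function requiredArgs(tools: ListedTool[]): Record<string, string[]> {
  const out: Record<string, string[]> = {};
  for (const tool of tools) out[tool.name] = tool.inputSchema.required ?? [];
  return out;
}
```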

In this case, the input schema requires the lat and lon properties. We can call this tool by providing the name of the tool alongside the required arguments (again, as defined by the input schema):
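Here is a TypeScript sketch of the matching tools/call message. It assumes the tool is still named getWeather, after its operationId, since it has not been renamed yet. Following the text, it also assumes the query parameters are nested under queryParams. Whether lat and lon should be numbers or strings depends on your OpenAPI parameter schema.

```typescript
// Assumed argument shape: query parameters nested under queryParams.
interface WeatherToolArgs {
  queryParams: { lat: number; lon: number };
}

// Builds a JSON-RPC 2.0 tools/call request for the weather tool.
function buildWeatherToolCall(id: number, toolName: string, lat: number, lon: number) {
  const args: WeatherToolArgs = { queryParams: { lat, lon } };
  return {
    jsonrpc: "2.0" as const,
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}
```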

Notice that we provide the query params as arguments in a queryParams object in the JSON-RPC message. The MCP server handler takes care of argument serialization and validation, because it automatically transforms the OpenAPI spec into an MCP-callable tool with the appropriate inputSchema. Also notice that we get back the structuredContent object. Again, this is required by the OpenAI Apps SDK in order to populate the global window.openai object with the tool call results. In practice, the MCP client and server handle all of this for you. Still, it's useful to understand what's going on within the protocol itself, since this informs how MCP apps are crafted and how they work.
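On the client side, the tools/call result can be consumed roughly as follows. The MCP specification recommends that a tool returning structuredContent also include the serialized JSON in a text content block for backwards compatibility. This sketch therefore prefers structuredContent and, as its own design choice, falls back to parsing the first text block.

```typescript
// Subset of the MCP CallToolResult shape.
interface TextContent { type: "text"; text: string }
interface CallToolResult {
  content: Array<TextContent | { type: string }>;
  structuredContent?: Record<string, unknown>;
  isError?: boolean;
}

// Prefers structuredContent; otherwise tries to parse the first text block as JSON.
function readToolPayload(result: CallToolResult): Record<string, unknown> | undefined {
  if (result.isError) return undefined;
  if (result.structuredContent) return result.structuredContent;
  const text = result.content.find((c): c is TextContent => c.type === "text");
  if (!text) return undefined;
  try {
    const parsed: unknown = JSON.parse(text.text);
    return typeof parsed === "object" && parsed !== null
      ? (parsed as Record<string, unknown>)
      : undefined;
  } catch {
    // Text content was not JSON.
    return undefined;
  }
}
```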

Build a UI template resource

When calling tools in apps, the OpenAI Apps SDK expects a UI template that it can display in its HTML sandbox. These bundles of tools and widgets make up the full, end-to-end user experience within the chat. Think of it as dynamic UI generation: the MCP resource supplies the widget's HTML, and the widget renders the tool's output once the chat client displays it in its sandbox.

Let's stub out another simple API endpoint to serve as a resource in our MCP server and return the HTML we need.

In config/routes.oas.json, add the following route inside the paths object:

You'll also need to add this operation to your MCP server's operations array in the /mcp route configuration:

x-zuplo-route.mcp is a very important chunk of OpenAPI JSON, so let's take a bit of time to understand exactly what's going on:

The x-zuplo-route.mcp object on the route defines MCP-specific metadata for the entities (resources, tools, etc.). In MCP, resources are typically read-only objects like files on a filesystem or blobs in a bucket. In this case, we're providing some HTML that will accompany the tool in order to provide a UI for the end user. We provide some sensible defaults, like using the OpenAPI operationId and description. Even so, it's important to use AI-specific names and descriptions, especially if your OpenAPI descriptions are generic or intended for non-agentic audiences. You can think of this object as the place where you perform "prompt engineering" or "context engineering" to fine-tune the metadata and get the most value out of your MCP tools and resources. In the case of the OpenAI Apps SDK, this is the name and description of the resource the chat agent will use when it executes a specific tool from the MCP server.

- type is the type of MCP entity this route will be: by default, this is a tool. In this case, we're setting it to resource.
- name is the name of the resource that the AI agent or system will see when it performs a resources/list. This name is very important, as it is one of the main ways to "communicate" to the downstream agent exactly what this entity is. In our case, we want to communicate that this is the widget for the weather tool.
- description is the long-form description of the resource. Along with the name field, this is the main way the AI agent "understands" exactly what this resource is.
- uri is the "location" of the resource. Historically, this would have been a literal file path or a link to a bucket. For the OpenAI Apps SDK, we instead set this URI to a non-existent, "virtual" ui:// path that can be referenced later using this exact path. This field will be linked to a tool in metadata, so note the uri you give it carefully!
- mimeType is an optional field that defines what "type" the resource is. Importantly, for the OpenAI Apps SDK, this must be set to text/html+skybridge in order to display it in the HTML sandbox within the chat.
- _meta is a free-form object that is used during publishing to set the domain and other important metadata for the resource.
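A client fetches the widget template with the MCP resources/read method, passing the same uri. The TypeScript sketch below builds that request and selects the HTML only when it carries the text/html+skybridge mimeType described above. The URI ui://widget/weather.html is hypothetical, so use the uri you actually set in x-zuplo-route.mcp.

```typescript
// mimeType the OpenAI Apps SDK expects for widget templates.
const SKYBRIDGE_MIME = "text/html+skybridge";

// Hypothetical widget URI: use the uri configured on your resource route.
const WIDGET_URI = "ui://widget/weather.html";

// JSON-RPC request a client sends to fetch the widget template.
function buildReadResourceRequest(id: number, uri: string) {
  return { jsonrpc: "2.0" as const, id, method: "resources/read", params: { uri } };
}

// Subset of the text resource contents returned by resources/read.
interface TextResourceContents { uri: string; mimeType?: string; text: string }

// Returns the HTML for the URI only if it is marked as a skybridge widget.
function findWidgetHtml(contents: TextResourceContents[], uri: string): string | undefined {
  const match = contents.find((c) => c.uri === uri && c.mimeType === SKYBRIDGE_MIME);
  return match?.text;
}
```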

Now, let's briefly look at the TypeScript module that is used as a resource in order to render the widget.

Create the file modules/widget.ts with the following content:

This simply returns the literal text/html of our widget, including the HTML components, the styling, and the scripts. In a real production setting, you may choose to build this on the gateway like in this example, pull the documents from a bucket, or do more robust rendering or templating in your backends.
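The following is a stripped-down illustration of what such a module might assemble. It is not the module from this guide. The element ID, the CSS, and the toolOutput field names (temperature, condition, humidity) are all assumptions. The function only builds the HTML string, and wrapping it in a Zuplo handler response is left out.

```typescript
// Builds a self-contained widget: markup, styles, and an inline script.
// The element ID and toolOutput field names are hypothetical.
function renderWidgetHtml(): string {
  const styles = `
    #weather { font-family: system-ui, sans-serif; padding: 12px; }
  `;
  // Runs inside the sandbox and reads the tool output exposed on window.openai.
  const script = `
    const out = window.openai && window.openai.toolOutput;
    if (out) {
      document.getElementById("weather").textContent =
        out.temperature + " degrees, " + out.condition + ", humidity " + out.humidity + "%";
    }
  `;
  return `<div id="weather">Loading...</div>
<style>${styles}</style>
<script type="module">${script}</script>`;
}
```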

In this example, you'll notice a special, globally exposed window.openai object alongside an openai:set_globals event listener. Designing robust UIs for the OpenAI Apps SDK is beyond the scope of this post, but it's important to understand these pieces and how they relate to your user's experience. The data in window.openai.toolOutput carries the structured content of the tool call associated with this widget, and it can be used to populate the UI. This is the data the tool hands to our sandboxed widget so that it can display the weather (i.e., the temperature, condition, and humidity).
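The listener side can be sketched in TypeScript as below. It assumes, based on our reading of the OpenAI Apps SDK docs, that the openai:set_globals event carries the updated values under event.detail.globals. It also assumes the toolOutput field names from the sentence above. The target parameter stands in for window so the logic can be exercised outside a browser.

```typescript
// Assumed shape of the tool's structured content; adjust to your API's payload.
interface WeatherOutput {
  temperature: number;
  condition: string;
  humidity: number;
}

// Narrows an unknown toolOutput value to WeatherOutput.
function isWeatherOutput(value: unknown): value is WeatherOutput {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Partial<WeatherOutput>;
  return (
    typeof v.temperature === "number" &&
    typeof v.condition === "string" &&
    typeof v.humidity === "number"
  );
}

// Turns tool output into display text for the widget.
function formatWeather(out: WeatherOutput): string {
  return `${out.temperature} degrees, ${out.condition}, ${out.humidity}% humidity`;
}

// Forwards new toolOutput values from openai:set_globals events.
// Inside the widget sandbox, target would be window.
function onToolOutput(target: EventTarget, callback: (out: WeatherOutput) => void): void {
  target.addEventListener("openai:set_globals", (event: Event) => {
    const detail = (event as Event & { detail?: { globals?: { toolOutput?: unknown } } }).detail;
    const out = detail?.globals?.toolOutput;
    if (isWeatherOutput(out)) callback(out);
  });
}
```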

Read more about building robust UI components and the global window.openai object available to UI components on the OpenAI docs site.

Next, let's wire up the tool to integrate with our widget UI!

Connect with the tool

For the OpenAI Apps SDK to display our widget, we need to add some _meta to our tool. Let's go back to the /weather route and do this directly in the x-zuplo-route.mcp property.

In config/routes.oas.json, update the /weather route's x-zuplo-route object to include the mcp property:

Like the data on the widget's resource, the x-zuplo-route.mcp data on the tool for this route is just as important:

- type is the type of MCP entity this route will be: by default, this is a tool.
- name is the tool name that the AI agent or system will see. Again, this is very important to help the agent "understand" what invoking this tool and its associated widget will produce. Here, we give the tool the name get_weather in order to differentiate it from the getWeather operation ID in our OpenAPI doc. From my personal experience, extremely clear and structured tool names with underscores, like some_tool_name, improve the agent's understanding and success rate. This often does not align with what organizations have defined as an operationId in their OpenAPI doc. I encourage you to take advantage of this field and provide a unique, agent-focused tool name. Again, I cannot stress enough how important a clear tool name is!
- description is the long-form description of the MCP tool. Again, this is used by the LLM to "understand" exactly what this tool is and what it's for. It will drastically influence when the AI chat agent calls this tool and displays this tool's widget.
- annotations are optional MCP-specific annotations. In this case, we provide a readOnlyHint to inform the MCP client and agent that this tool does not mutate anything and is "read only" by nature. There are several other supported annotations that you may choose to use.
- _meta is an optional "free" object where we can provide any metadata. In this case, we must use it to provide the OpenAI Apps SDK-specific metadata:
  - openai/toolInvocation/invoking is the message the chat agent will display while invoking the tool.
  - openai/toolInvocation/invoked is the message the chat agent will display once the tool has been invoked.
  - openai/outputTemplate is the URI of the resource that will be displayed to the end user. This connects this tool with the resource that holds our widget, as a sort of "bundle" to be used in the chat interface. Again, this is what links the HTML widget we built as a resource with the actual tool!
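From a client's point of view, the tool entry in tools/list should then carry this metadata. The TypeScript sketch below shows roughly what that looks like and how openai/outputTemplate resolves to the widget resource by matching uri. The messages, description, resource name, and ui://widget/weather.html URI are hypothetical examples.

```typescript
// Subset of the MCP Tool shape as returned by tools/list.
interface ToolDescriptor {
  name: string;
  description?: string;
  inputSchema: Record<string, unknown>;
  annotations?: { readOnlyHint?: boolean };
  _meta?: Record<string, unknown>;
}

// Subset of a resource entry as returned by resources/list.
interface ResourceDescriptor { uri: string; name: string; mimeType?: string }

// Example tool descriptor; messages and URI are hypothetical.
const weatherTool: ToolDescriptor = {
  name: "get_weather",
  description: "Get current weather conditions for a latitude and longitude.",
  inputSchema: { type: "object" },
  annotations: { readOnlyHint: true },
  _meta: {
    "openai/toolInvocation/invoking": "Checking the weather...",
    "openai/toolInvocation/invoked": "Weather retrieved",
    "openai/outputTemplate": "ui://widget/weather.html",
  },
};

// Resolves the widget resource a tool points at via openai/outputTemplate.
function findOutputTemplate(
  tool: ToolDescriptor,
  resources: ResourceDescriptor[],
): ResourceDescriptor | undefined {
  const uri = tool._meta?.["openai/outputTemplate"];
  if (typeof uri !== "string") return undefined;
  return resources.find((r) => r.uri === uri);
}
```

If the uri on the resource and the openai/outputTemplate value differ by even one character, the lookup fails, which is why the uri deserves care.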

Testing

Once we deploy all of this to a Zuplo gateway, we have a working MCP server behind HTTPS. It can execute our tool (which calls the /weather API) and serve a small UI component to display in the chat interface's sandbox.

In order to test this:

- Go to https://chatgpt.com > Settings > Apps > Advanced Settings
- Ensure that you have "Developer mode" enabled
- Select the "Create app" button
- Enter the details of your App, including the /mcp endpoint for the server

Test the app in the chat interface by selecting and enabling it from the "More" menu.

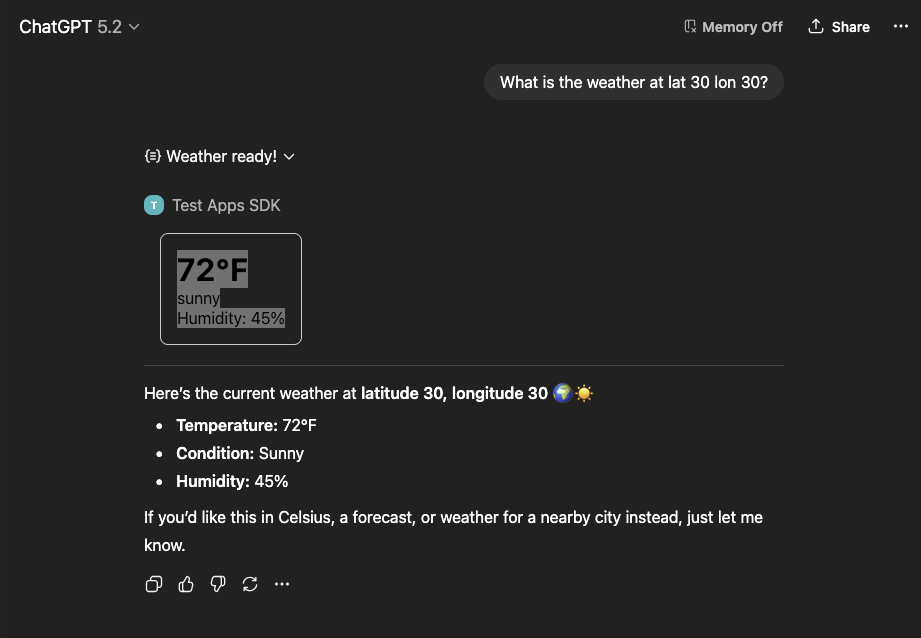

Here we can see my "Test Apps SDK" App in use. It called the get_weather tool, which returned our stub weather data, and it's displaying my widget. The widget's script then picks up this data and displays it!

Advanced

In some cases, you may need to intercept the call to your API with a "wrapper" module. This may be necessary to set specific headers, invoke other ancillary APIs, or intercept the raw tool call request.

You can accomplish this through the ZuploMcpSdk in the @zuplo/runtime package in a separate module. Then, set this new module as the tool that interfaces with the Apps SDK client.

In the following wrapper example, we use the Zuplo MCP SDK to access the incoming MCP request _meta manually in order to get a special field and use it in a POST body to another route on the gateway using the context.invokeRoute method.

Create a wrapper module in modules/weather-wrapper.ts:

This is especially useful when _meta carries data meant exclusively for the widget, i.e., data that exists out of band of the actual LLM context window and the LLM-generated tool arguments. Using ZuploMcpSdk gives you full, unbridled access to the original MCP request.
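The data-handling half of such a wrapper can be sketched as a pure TypeScript function. It takes the raw tools/call params, which per the MCP spec may include _meta alongside name and arguments. From these it builds a JSON body for a downstream route. The _meta key example/widgetState and the body layout are hypothetical. The sketch also deliberately omits the ZuploMcpSdk and context.invokeRoute calls, whose exact signatures are documented in the Zuplo docs rather than reproduced here.

```typescript
// Subset of the MCP tools/call request params.
interface CallToolParams {
  name: string;
  arguments?: Record<string, unknown>;
  _meta?: Record<string, unknown>;
}

// Hypothetical _meta key carrying widget-only data.
const WIDGET_STATE_KEY = "example/widgetState";

// Combines the LLM-generated arguments with out-of-band widget data from _meta
// into a JSON body for another route on the gateway.
function buildDownstreamBody(params: CallToolParams): string {
  const widgetState = params._meta?.[WIDGET_STATE_KEY] ?? null;
  return JSON.stringify({ arguments: params.arguments ?? {}, widgetState });
}
```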

Learn more about using the ZuploMcpSdk on the docs site, including getRawCallToolRequest and setRawCallToolResult.

Next Steps

When actually submitting your OpenAI App for review, there are a few additional _meta fields you'll need to configure on your widget resource, including openai/widgetCSP and openai/widgetDomain.

Further, there are several advanced capabilities in the window.openai object that make building robust UIs easier with more powerful user interactions.

Soon, the Model Context Protocol will adopt "MCP Apps" as an official MCP extension. This official extension will be based largely on how OpenAI built the Apps SDK. While it will look very similar to the OpenAI Apps SDK, keep an eye out for the official standard, which will make your apps available in more chat clients!

Good luck and happy MCP-ing!