Note: this sample uses Policies, so read this guide first.

In this sample, we'll show how you can archive the text body of incoming requests to Azure Blob Storage. We also have a post on Archiving to AWS S3 Storage.

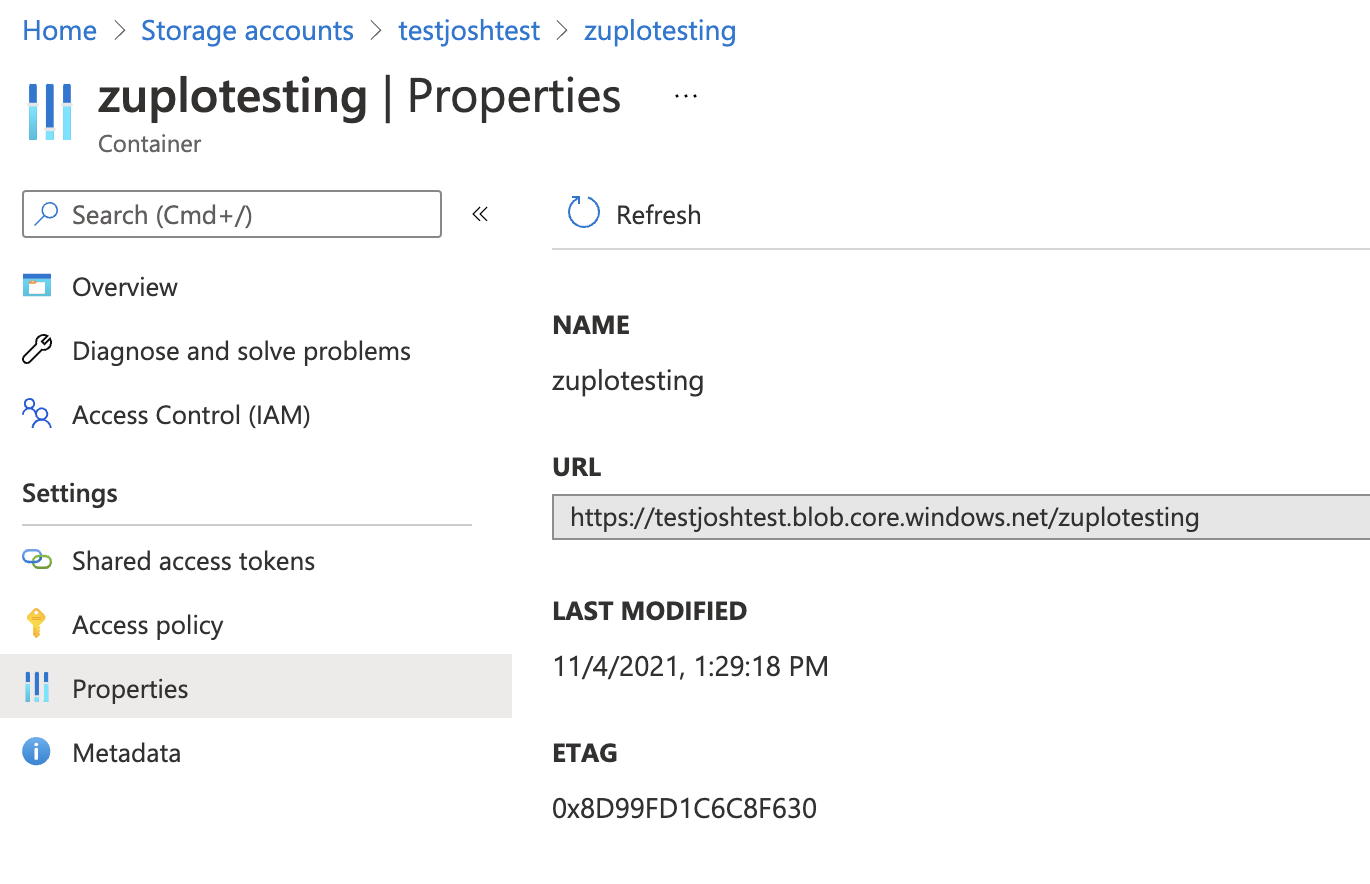

First, let's set up Azure. You'll need a container in Azure Storage (docs). Once you have your container, you'll need its URL: click the Properties tab of your container, as shown below.

This URL will be the blobPath in our policy options.

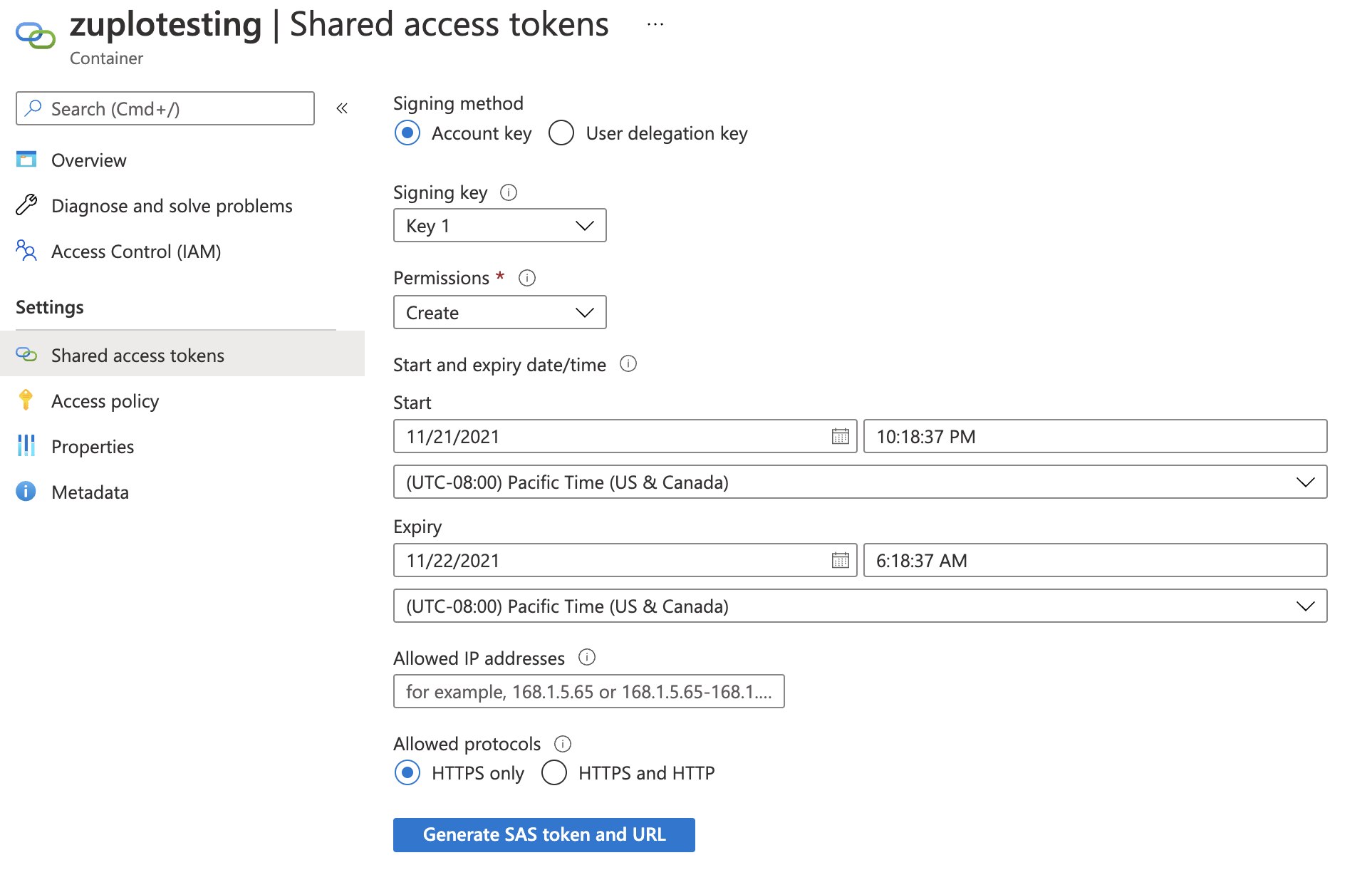

Next, we'll need a SAS (Shared Access Signature) to authenticate with Azure. You can generate one on the Shared access tokens tab.

Keep the permissions to a minimum and select only the Create permission. Choose a sensible start and expiry time for your token. We don't recommend restricting IP addresses, because Zuplo runs at the edge in over 200 data centers worldwide.
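If you want to double-check a token before saving it, these choices are visible in the token itself. The `sp` parameter lists the granted permissions (`c` is Create), and `st` and `se` hold the start and expiry times. Here is a minimal sketch of such a check. `checkSasToken` is a hypothetical helper, not a Zuplo or Azure API. It assumes an ad hoc SAS, where these values sit in the token rather than in a stored access policy.

```typescript
// Hypothetical sanity check for a SAS token, using its standard query parameters:
//   sp = signed permissions ("c" is Create), st = start time, se = expiry time.
interface SasCheck {
  createOnly: boolean; // true if the token grants Create and nothing else
  startsAt: Date | null; // parsed "st", if present
  expiresAt: Date | null; // parsed "se", if present
  validNow: boolean; // true if `now` falls inside the [st, se) window
}

function checkSasToken(sasToken: string, now: Date = new Date()): SasCheck {
  // URLSearchParams ignores a single leading "?" and decodes values such as "%3A".
  const params = new URLSearchParams(sasToken);
  const st = params.get("st");
  const se = params.get("se");
  const startsAt = st ? new Date(st) : null;
  const expiresAt = se ? new Date(se) : null;
  return {
    createOnly: params.get("sp") === "c",
    startsAt,
    expiresAt,
    validNow:
      expiresAt !== null && now < expiresAt && (startsAt === null || now >= startsAt),
  };
}
```

A token that reports `createOnly: false`, such as one generated with `sp=racwdl`, grants more than the archive policy needs.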

Then generate your SAS token - copy the token (not the URL) to the clipboard and

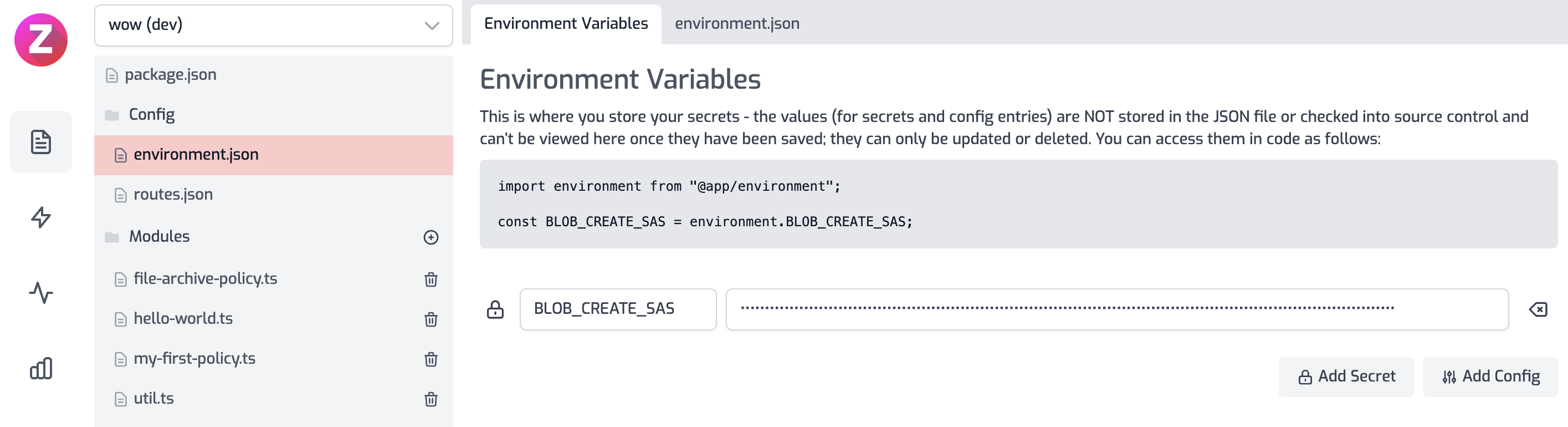

enter it into a new environment variable in your API called BLOB_CREATE_SAS.

You'll need another environment variable called BLOB_CONTAINER_PATH, set to the container URL you copied from the Properties tab.

Note: production customers should talk to a Zuplo representative for help managing their secure keys.

We'll write a policy called request-archive-policy that can be used on all routes.

file-archive-policy.ts
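Here is a minimal sketch of what this file could contain. It is an illustration, not the sample's original source. It assumes Zuplo's custom inbound policy shape: a default export that receives the request, a context, the options from policies.json, and the policy name, and returns the request to continue. It also assumes hypothetical option names `blobPath` and `blobCreateSas`.

The policy reads the body from a clone, so the route handler can still consume it. It then uploads the body with Azure's Put Blob operation, which is an HTTP `PUT` with the `x-ms-blob-type: BlockBlob` header. The types are declared locally so the example stands on its own.

```typescript
// Sketch of file-archive-policy.ts. The types below are local stand-ins so the example
// runs on its own; in a Zuplo project you would likely use ZuploRequest and ZuploContext
// from "@zuplo/runtime" instead (assumption).

// Hypothetical option names; they must match the "options" object in policies.json.
interface ArchiveOptions {
  blobPath: string; // container URL, e.g. https://<account>.blob.core.windows.net/<container>
  blobCreateSas: string; // SAS token with only the Create permission
}

// The one context method this sketch relies on (assumed to match Zuplo's context.waitUntil).
interface ArchiveContext {
  waitUntil(promise: Promise<unknown>): void;
}

// Builds an Azure "Put Blob" request that stores `body` as a new block blob.
function buildArchiveRequest(body: string, blobName: string, options: ArchiveOptions): Request {
  const container = options.blobPath.replace(/\/+$/, ""); // drop trailing slashes
  const sas = options.blobCreateSas.replace(/^\?/, ""); // tolerate a leading "?"
  return new Request(`${container}/${encodeURIComponent(blobName)}?${sas}`, {
    method: "PUT",
    headers: {
      "x-ms-blob-type": "BlockBlob", // required header for Put Blob
      "content-type": "text/plain; charset=utf-8",
    },
    body,
  });
}

export default async function requestArchivePolicy(
  request: Request,
  context: ArchiveContext,
  options: ArchiveOptions,
  policyName: string,
): Promise<Request> {
  // Read a clone so the original body stream is still available to the route handler.
  const body = await request.clone().text();

  // Timestamp plus a random suffix; with only the Create permission, Azure rejects
  // writes that would overwrite an existing blob, so names need to be unique.
  const stamp = new Date().toISOString().replace(/[:.]/g, "-");
  const blobName = `${stamp}-${Math.random().toString(36).slice(2, 10)}.txt`;

  const upload = fetch(buildArchiveRequest(body, blobName, options))
    .then((res) => {
      if (!res.ok) {
        console.error(`${policyName}: archive upload failed with status ${res.status}`);
      }
    })
    .catch((err) => console.error(`${policyName}: archive upload error`, err));

  // Let the upload finish in the background instead of delaying the request.
  context.waitUntil(upload);

  // Returning the request lets it continue to the next policy or handler.
  return request;
}
```

Handing the upload to `waitUntil` keeps the archive off the request's critical path. The trade-off is that a failed upload is only logged and never blocks the caller.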

Finally, configure your policies.json file to include the policy, as in the example below:
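Here is a sketch of a policies.json entry for this policy. Several details are assumptions, so adjust them to match your project:

- the `custom-code-inbound` policy type
- a module at `./modules/file-archive-policy`
- the option names `blobPath` and `blobCreateSas`

Zuplo substitutes the `$env(...)` strings with the environment variables set up earlier. The entry is written here as a typed TypeScript object so its shape is explicit, and `JSON.stringify` prints the file contents.

```typescript
// Minimal types for the subset of a policies.json entry used here (assumed shape).
interface PolicyEntry {
  name: string;
  policyType: string;
  handler: {
    export: string;
    module: string;
    options: Record<string, string>;
  };
}

const policiesConfig: { policies: PolicyEntry[] } = {
  policies: [
    {
      name: "request-archive-policy", // routes refer to the policy by this name
      policyType: "custom-code-inbound",
      handler: {
        export: "default", // the policy module's default export
        module: "$import(./modules/file-archive-policy)", // assumed file location
        options: {
          // Substituted by Zuplo from the environment variables.
          blobPath: "$env(BLOB_CONTAINER_PATH)",
          blobCreateSas: "$env(BLOB_CREATE_SAS)",
        },
      },
    },
  ],
};

// Print the JSON to paste into policies.json.
console.log(JSON.stringify(policiesConfig, null, 2));
```

Keeping the SAS token behind `$env(...)` means it never appears in source control.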


Don't forget to reference the file-archive-policy in the policies.inbound property of your routes.
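Because the policy is meant for all routes, you may prefer to add it programmatically rather than by hand. Routes list a policy by its `name` from policies.json. Here is a minimal sketch over a simplified, hypothetical route shape that keeps only the `policies.inbound` array mentioned above. `addInboundPolicy` is an illustrative helper, not a Zuplo API.

```typescript
// Simplified, hypothetical route shape: only the field this step touches.
interface Route {
  path: string;
  policies?: { inbound?: string[] };
}

// Returns copies of `routes` where `policyName` appears in policies.inbound exactly once.
// Existing inbound policies keep their order; the archive policy is appended after them.
function addInboundPolicy(routes: Route[], policyName: string): Route[] {
  return routes.map((route) => {
    const inbound = route.policies?.inbound ?? [];
    if (inbound.includes(policyName)) {
      return route; // already referenced; leave unchanged
    }
    return {
      ...route,
      policies: { ...route.policies, inbound: [...inbound, policyName] },
    };
  });
}
```

Inbound policies run in the order listed. Appending the archive policy therefore places it after checks such as authentication, so requests those checks reject are not archived. Prepend it instead if you want every attempt recorded.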

Here's the policy in action: