The Gemini API serves as Google's gateway to sophisticated artificial intelligence capabilities. This guide explores how developers can integrate Gemini 2.0's advanced features into their applications, positioning it as a leading contender in today's AI landscape. Recent updates have doubled output speeds while reducing latency by two-thirds, particularly evident in the Gemini 1.5 Flash model's improved token output rates.

With expanded rate limits supporting up to 2,000 requests per minute for paid tiers and configurable safety filters providing greater implementation control, Gemini 2.0 offers developers powerful tools for building smarter, more responsive applications across various use cases.

Gemini 2.0 Models Overview#

Google's Gemini 2.0 family represents a significant advancement in AI capabilities, with various models designed for different use cases and performance requirements.

Key Models in the Lineup#

- Gemini-1.5-Pro-002: Google's most powerful production model for complex reasoning tasks and sophisticated content generation, now with over 50% price reduction for both input and output tokens.

- Gemini-1.5-Flash-002: A lightweight, efficient model balancing performance with speed, ideal for applications requiring quick responses and high throughput.

- Gemini-1.5-Flash-8B-Exp-0924: An experimental model featuring significant enhancements for both text and multimodal applications.

- Gemini 1.0 Pro: Previous-generation model offering strong language capabilities at a lower price point.

Primary Use Cases#

Gemini models excel across numerous applications:

- Text generation and analysis

- Multimodal understanding (processing both text and visual inputs)

- Code generation and analysis

- Conversational AI

- Enterprise applications via Google Cloud's Vertex AI

The recent improvements in rate limits (2x higher for 1.5 Flash and nearly 3x higher for 1.5 Pro) expand potential use cases by allowing more frequent model calls and improved application responsiveness.

Step-by-Step Implementation Guide#

Getting Started with Gemini 2.0 API#

- Obtain an API Key
  - Sign up for Google Cloud Platform
  - Create a project and enable the Gemini API
  - Generate your API key

- Install the SDK
  - Choose your preferred programming language
  - Install the corresponding SDK
  - We have a demo of this in our Firestore/Firebase API tutorial, so check that out.

- Set Up Authentication
  - Configure your API key securely

- Make API Requests
  - Specify your preferred model (e.g., gemini-1.5-flash)
  - Create clear prompts

Python Implementation:

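import google.generativeai as genai

# Authenticate the SDK (replace the placeholder with your own key)
genai.configure(api_key="YOUR_API_KEY")

# Select the model by name
model = genai.GenerativeModel("gemini-1.5-flash")

# Send a text prompt and wait for the complete response
response = model.generate_content("Explain how AI works")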

print(response.text)

Node.js Implementation:

const { GoogleGenerativeAI } = require("@google/generative-ai");

const genAI = new GoogleGenerativeAI("YOUR_API_KEY");

const model = genAI.getGenerativeModel({ model: "gemini-1.5-flash" });

// Top-level await is not available in CommonJS, so wrap the call in an async function
async function main() {
  const prompt = "Explain how AI works";
  const result = await model.generateContent(prompt);
  console.log(result.response.text());
}

main();

Because this example loads the SDK with require (CommonJS), the await call is wrapped in an async function. In an ES module you can use import and top-level await instead.
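The "configure your API key securely" step is commonly handled by reading the key from an environment variable instead of hard-coding it. A minimal sketch, assuming a variable named `GEMINI_API_KEY`; that name and the `load_api_key` and `make_model` helpers are illustrative choices, not something the SDK requires:

```python
import os


def load_api_key(env=None, var_name="GEMINI_API_KEY"):
    """Return the API key stored in an environment variable.

    `var_name` is an assumed naming convention; use whatever your deployment sets.
    `env` defaults to os.environ and exists mainly to make testing easy.
    """
    env = os.environ if env is None else env
    key = (env.get(var_name) or "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export it before starting the app")
    return key


def make_model(model_name="gemini-1.5-flash"):
    """Configure the SDK from the environment and return a model handle."""
    # Imported lazily so load_api_key stays usable without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=load_api_key())
    return genai.GenerativeModel(model_name)
```

With this in place, `make_model().generate_content("Explain how AI works")` behaves like the Python example above, but the key never appears in source control.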

Tutorial: How to Integrate LLM APIs#

Most LLM APIs follow a similar format and use nearly identical SDKs. Check out this tutorial on how to build an integration with the Groq API to see how it's done.

Framework Integration Strategies#

Implementing Gemini 2.0 into your applications often requires custom API integration strategies to fit specific architectural needs.

For established frameworks, adapt the implementation to fit your architecture:

- Django/Flask: Create service layers for secure API querying and use settings to store keys securely

- Express.js: Implement middleware for handling authentication and requests

- Docker/Kubernetes: Store API keys as secure environment variables or secrets

For framework-specific examples, leverage the open-source SDK repositories available for Python, JavaScript, and other supported languages.
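The service-layer idea mentioned for Django and Flask can be sketched without tying it to either framework. In this hedged example, `GeminiService`, `sdk_generate`, `max_prompt_chars`, and the `GEMINI_API_KEY` variable are all hypothetical names chosen for illustration; only the SDK calls inside `sdk_generate` come from the Python example earlier in this guide:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GeminiService:
    """Thin service layer that views or route handlers call instead of the SDK."""

    generate: Callable[[str], str]  # injected; wraps the real SDK call in production
    max_prompt_chars: int = 8000    # hypothetical guardrail, tune for your app

    def answer(self, user_input: str) -> str:
        prompt = user_input.strip()
        if not prompt:
            raise ValueError("prompt must not be empty")
        if len(prompt) > self.max_prompt_chars:
            raise ValueError("prompt exceeds max_prompt_chars")
        return self.generate(prompt)


def sdk_generate(prompt: str) -> str:
    """Production adapter built from the same SDK calls as the earlier example."""
    import os

    import google.generativeai as genai  # lazy import keeps the service testable

    genai.configure(api_key=os.environ["GEMINI_API_KEY"])  # assumed variable name
    model = genai.GenerativeModel("gemini-1.5-flash")
    return model.generate_content(prompt).text
```

A view would construct `GeminiService(generate=sdk_generate)` once and call `answer()`, while unit tests can inject a stub function so no network call is made.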

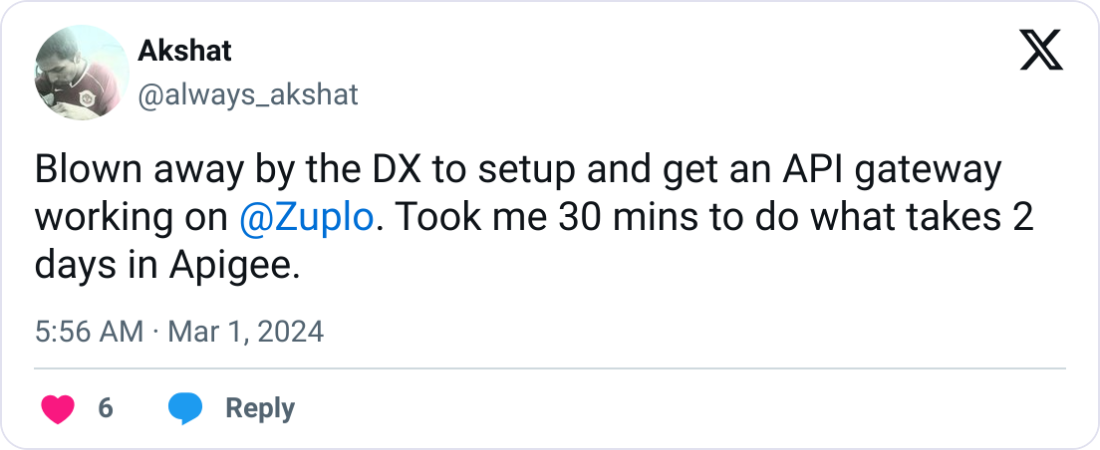

Over 10,000 developers trust Zuplo to secure, document, and monetize their APIs

Optimization for Quality and Cost#

Parameter Tuning Strategies#

Fine-tune these key parameters for optimal results:

- Temperature: (0.0-1.0 on Gemini 1.0 Pro; Gemini 1.5 models accept values up to 2.0) Controls randomness; lower values produce more deterministic outputs

- Top-P Sampling: (0.0-1.0) Balances diversity and quality in generated text

- Candidate Count: (1-8) Generates multiple response options

- Maximum Output Tokens: Set appropriate length limits for your use case

- Safety Filters: Configure based on your specific requirements
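The parameters listed above can be collected into a single validated configuration. The sketch below is one possible helper, not part of the SDK: `build_generation_config` and its default values are assumptions, and the upper temperature bound is passed in as `max_temperature` because it depends on the model. The returned keys use the snake_case names that the Python SDK's generation config expects.

```python
def build_generation_config(
    temperature=0.2,
    top_p=0.95,
    candidate_count=1,
    max_output_tokens=512,
    max_temperature=1.0,
):
    """Validate tuning parameters and return them as a plain dict."""
    if not 0.0 <= temperature <= max_temperature:
        raise ValueError(f"temperature must be within 0.0-{max_temperature}")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be within 0.0-1.0")
    if not 1 <= candidate_count <= 8:
        raise ValueError("candidate_count must be within 1-8")
    if max_output_tokens < 1:
        raise ValueError("max_output_tokens must be positive")
    return {
        "temperature": temperature,
        "top_p": top_p,
        "candidate_count": candidate_count,
        "max_output_tokens": max_output_tokens,
    }
```

The resulting dict can be passed as the `generation_config` argument of `model.generate_content(...)`. A low temperature suits extraction or classification, while creative writing usually benefits from a higher value.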

Cost Management Techniques#

- Leverage Recent Price Reductions: 50%+ decrease for Gemini 1.5 Pro model

- Use Higher Rate Limits: 2,000 RPM for Flash, 1,000 RPM for Pro

- Implement Batch Processing: Combine tasks to reduce overall API calls

- Add Client-Side Caching: Eliminate redundant requests by caching API responses effectively

- Monitor Usage: Track consumption patterns to optimize spending; using Google Cloud logging can help monitor your API usage efficiently
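Client-side caching from the list above can be as simple as keying responses on the model, prompt, and configuration. This is a minimal in-memory sketch under stated assumptions: `ResponseCache` is a hypothetical class, `generate` is any callable you supply that performs the real API call, and eviction is plain first-in-first-out rather than a tuned policy.

```python
import hashlib
import json


class ResponseCache:
    """In-memory cache that skips the API call for repeated identical requests."""

    def __init__(self, generate, max_entries=1024):
        self._generate = generate        # callable(model, prompt, config) -> str
        self._max_entries = max_entries
        self._store = {}                 # dicts preserve insertion order
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt, config):
        # Hash a canonical JSON form so equal requests map to the same key.
        payload = json.dumps(
            {"model": model, "prompt": prompt, "config": config}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, model, prompt, config=None):
        config = config or {}
        key = self._key(model, prompt, config)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        text = self._generate(model, prompt, config)
        if len(self._store) >= self._max_entries:
            # Evict the oldest entry (FIFO) to bound memory use.
            self._store.pop(next(iter(self._store)))
        self._store[key] = text
        return text
```

Caching like this only makes sense when repeated prompts should return the same answer, which usually means a low temperature. The `hits` and `misses` counters give a rough view of how many calls were saved.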

Advanced Techniques#

Multimodal Integration#

Gemini 2.0's multimodal capabilities allow your applications to:

- Process text, images, and video within the same context

- Generate insights connecting visual and textual elements

- Create comprehensive solutions without switching between specialized models
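To make "text and images within the same context" concrete, the sketch below builds a request body that carries a prompt and an image together. It assumes the REST request shape with `contents`, `parts`, and base64-encoded `inline_data`; treat those field names as an assumption to verify against the current API reference. `build_multimodal_request` is a hypothetical helper, and no network call is made here.

```python
import base64
import json


def build_multimodal_request(prompt, image_bytes, mime_type="image/png"):
    """Return a request body combining a text part and an inline image part."""
    return {
        "contents": [
            {
                "role": "user",
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            # Binary data must be base64-encoded to travel in JSON.
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ],
            }
        ]
    }


def to_json(request_body):
    """Serialize the request body for an HTTP client of your choice."""
    return json.dumps(request_body)
```

Because both parts sit in the same `parts` list, the model receives them as one turn rather than as two separate requests.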

Implementation returns structured JSON responses:

{
  "candidates": [
    {
      "content": {
        "parts": [
          {
            "text": "AI, or artificial intelligence, refers to..."
          }
        ]
      }
    }
  ]
}

Context-Based Reasoning#

Gemini 2.0 excels at sophisticated context handling:

- Understanding complex multi-parameter instructions

- Maintaining context across extended interactions

- Applying logical reasoning to solve problems

This enables applications like:

- Team collaboration environments with customized AI instructions

- Dashboard monitoring systems tracking AI interactions

- Context-aware automation adapting to different business scenarios
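Maintaining context across extended interactions usually comes down to resending the conversation history with each request. The sketch below keeps that history in the `role` and `parts` shape and reads replies from the `candidates` structure shown earlier. `Conversation`, `extract_text`, and the `max_turns` trimming rule are hypothetical, and the `user` and `model` role names are an assumption about the request format.

```python
def extract_text(response):
    """Join the text parts of the first candidate in a response dict."""
    candidates = response.get("candidates") or []
    if not candidates:
        return ""
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)


class Conversation:
    """Rolling chat history that is resent with every request."""

    def __init__(self, max_turns=20):
        self.max_turns = max_turns
        self.history = []

    def _add(self, role, text):
        self.history.append({"role": role, "parts": [{"text": text}]})
        # Drop the oldest entries when over budget, and keep the history
        # starting on a user turn so the exchange stays well-formed.
        while self.history and (
            len(self.history) > self.max_turns
            or self.history[0]["role"] != "user"
        ):
            del self.history[0]

    def add_user(self, text):
        self._add("user", text)

    def add_model_response(self, response):
        self._add("model", extract_text(response))

    def as_request(self):
        """Return a request body containing the retained history."""
        return {"contents": list(self.history)}
```

Trimming by turn count is the simplest policy. Applications with long documents in context may prefer trimming by token count or summarizing older turns instead.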

Exploring Alternatives#

While Gemini offers powerful capabilities, consider these alternatives based on your specific needs:

- OpenAI GPT-4: Leading competitor with strong reasoning capabilities, but with a different pricing structure and different context limits.

- Anthropic Claude: Focuses on helpfulness, harmlessness, and honesty with impressive conversational abilities.

- Meta Llama 3: Open-source model that can be deployed locally or accessed via API, giving greater flexibility for private implementations.

- Cohere Command: Specialized for enterprise and business applications with strong document processing features.

- Mistral AI: Emerging European alternative with competitive performance and flexible deployment options.

Consider how you might use these platforms to process proprietary data and generate revenue, for example by exposing the results through an API gateway. For strategies on monetizing AI APIs, it is also worth comparing different models and platforms.

Each alternative offers different strengths in terms of capabilities, pricing, privacy features, and deployment options. Consider factors like context window size, multimodal abilities, and rate limits when selecting the most appropriate solution for your project.

Troubleshooting Common Challenges#

API Error Codes and Solutions#

| HTTP Code | Status | Solution |
|---|---|---|
| 400 | INVALID_ARGUMENT | Check request format against API reference |
| 403 | PERMISSION_DENIED | Verify API key permissions |
| 429 | RESOURCE_EXHAUSTED | Stay within rate limits or request an increase |
| 500 | INTERNAL | Consider reducing context size or switching models |
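In application code, the table above often ends up as a small lookup that decides what to do with a failed call. The sketch below encodes the four rows. The action labels and the `classify_error` helper are hypothetical, and treating only 429 as retryable is an assumption drawn from the solutions listed in the table.

```python
# Status names come from the table above; action labels are illustrative.
ERROR_ACTIONS = {
    400: ("INVALID_ARGUMENT", "fix_request_format"),
    403: ("PERMISSION_DENIED", "check_api_key_permissions"),
    429: ("RESOURCE_EXHAUSTED", "back_off_and_retry"),
    500: ("INTERNAL", "reduce_context_or_switch_model"),
}

RETRYABLE_CODES = {429}


def classify_error(http_code):
    """Map an HTTP status code to a status name and a suggested action."""
    status, action = ERROR_ACTIONS.get(http_code, ("UNKNOWN", "log_and_raise"))
    return {
        "status": status,
        "action": action,
        "retryable": http_code in RETRYABLE_CODES,
    }
```

Keeping the mapping in one place means logging, alerting, and retry logic all agree on how each error is handled.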

Performance Optimization Strategies#

- Rate Limit Management: Implement exponential backoff for 429 errors

- SDK Configuration: Use latest SDK versions with appropriate timeouts

- Safety Filters: Review settings if experiencing unexpected rejections

- Large-Scale Applications: Implement caching and batch processing
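Exponential backoff for 429 errors can be sketched as a small retry wrapper. Everything here is illustrative: `RateLimitError` stands in for whatever exception your client raises on a 429, and the delay values are arbitrary defaults rather than recommendations from Google.

```python
import random
import time


class RateLimitError(Exception):
    """Stand-in for the exception your client raises on HTTP 429."""


def call_with_backoff(
    fn,
    max_attempts=5,
    base_delay=1.0,
    max_delay=30.0,
    jitter=0.5,
    sleep=time.sleep,
    rng=random.random,
):
    """Call fn(), retrying on RateLimitError with exponentially growing waits."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # Double the wait on each attempt, capped at max_delay.
            delay = min(max_delay, base_delay * (2 ** attempt))
            # Random jitter keeps many clients from retrying in lockstep.
            delay += delay * jitter * rng()
            sleep(delay)
```

The `sleep` and `rng` parameters exist so the behavior can be tested without real waiting. In production the defaults are enough, for example `call_with_backoff(lambda: model.generate_content(prompt))` once the client's rate-limit exception is mapped to `RateLimitError`.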

For detailed error information, refer to the Gemini API Troubleshooting Guide.

Harnessing Gemini's Full Potential#

As you develop with Gemini 2.0, continuous improvement is key to maximizing results:

- Collect User Feedback: Implement in-app forms, conduct user interviews, and analyze support requests

- Run A/B Tests: Compare prompt structures, UI designs, and instruction sets

- Monitor Analytics: Track API call patterns, response times, and error rates

- Optimize Resources: Structure queries efficiently and batch requests where appropriate
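The "monitor analytics" step can start with a thin wrapper that records latency and outcome for every call. This is a minimal sketch with a hypothetical `CallMetrics` class. A production setup would more likely export these numbers to a logging or metrics system than hold them in memory.

```python
import time
from collections import Counter


class CallMetrics:
    """Track call counts, error rate, and latency for wrapped API calls."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self.latencies = []
        self.outcomes = Counter()

    def track(self, fn, *args, **kwargs):
        """Run fn, recording how long it took and whether it raised."""
        start = self._clock()
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.outcomes["error"] += 1
            raise
        else:
            self.outcomes["ok"] += 1
            return result
        finally:
            # Runs for both successes and failures.
            self.latencies.append(self._clock() - start)

    def summary(self):
        total = sum(self.outcomes.values())
        count = len(self.latencies)
        return {
            "calls": total,
            "error_rate": self.outcomes["error"] / total if total else 0.0,
            "avg_latency_s": sum(self.latencies) / count if count else 0.0,
        }
```

Comparing `summary()` output between two prompt variants is also a simple way to ground the A/B tests mentioned above in measured numbers.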

Additionally, employing effective API marketing strategies can enhance user engagement and market reach.

The Gemini 2.0 API offers powerful capabilities for AI development across content creation, customer support, data analysis, and more. Google's ongoing enhancements to performance, rate limits, and multimodal capabilities continue to strengthen its position in the AI landscape.

Whether you're building customer-facing applications or internal tools, understanding the hosted API gateway benefits can help you make informed decisions about managing your APIs more effectively. Consider implementing a robust API management solution to handle authentication, monitoring, and rate limiting efficiently. Zuplo's API Gateway offers comprehensive features for securing and optimizing your Gemini API implementation, ensuring both reliability and performance as you scale.