APIs are the lifeblood of modern software, and when throughput suffers, performance and user satisfaction slide with it. Latency often gets the blame, and for good reason: it is typically caused by network delays or inefficient data processing. Code inefficiencies also play a part, such as clunky algorithms or over-complicated calculations that eat up valuable processing time. And if resources like CPU, memory, or bandwidth fall short, your performance can quickly hit a wall.

The good news? By staying proactive with optimization and keeping an eye on your API’s performance, you can catch issues before they balloon out of control. That means fewer slowdowns, smoother service, and happier users. Today, we’ll talk about eight key strategies to help you keep your API throughput running at its best. Let’s dive in:

1. Optimize API Endpoints for Speed

Speedy endpoints are the foundation of a well-performing API. You'll see noticeable improvements in both throughput and response times by cutting out unnecessary calls, shrinking payloads, and streamlining routing. Here’s how you can tackle it:

Reduce Unnecessary API Calls

Grouping multiple operations into a single request can save a lot of overhead. Fewer trips to the server = better performance. If you haven’t done a performance audit in a while, you might be surprised by how many redundant API calls you’re making. Getting rid of those could shave a significant amount off your response times.

But it’s not just about cutting calls. You can combine data retrieval tasks into single, composite requests. For example, instead of making separate calls for user details and their preferences, you could bundle them together in one API call, which reduces the overall network load.
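Here is a minimal sketch of what such a composite endpoint handler might look like, assuming an async Python backend. The helpers fetch_user and fetch_preferences are hypothetical stand-ins for your own data-access code; the point is that the client makes one request, and the server gathers both pieces concurrently with asyncio.gather before returning a single response.

```python
import asyncio


# Hypothetical data-access helpers; each would normally hit a database or another service.
async def fetch_user(user_id: int) -> dict:
    await asyncio.sleep(0.01)  # simulate I/O latency
    return {"id": user_id, "name": "Ada"}


async def fetch_preferences(user_id: int) -> dict:
    await asyncio.sleep(0.01)  # simulate I/O latency
    return {"theme": "dark", "newsletter": False}


async def get_user_profile(user_id: int) -> dict:
    """Composite handler: one client request, both lookups run concurrently."""
    user, prefs = await asyncio.gather(fetch_user(user_id), fetch_preferences(user_id))
    return {**user, "preferences": prefs}


profile = asyncio.run(get_user_profile(42))
print(profile)  # {'id': 42, 'name': 'Ada', 'preferences': {'theme': 'dark', 'newsletter': False}}
```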

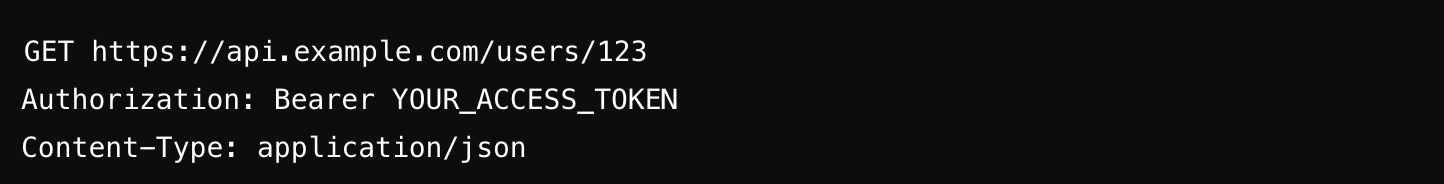

Optimize Data Returned

Don’t return more data than necessary. Large payloads = slow response times and increased bandwidth use. It’s also important to evaluate the data format used—JSON, for example, is typically lighter than XML, and some newer formats like Protocol Buffers or Avro could reduce payload sizes even further.
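One common way to avoid over-fetching is to let clients request only the fields they need, sometimes called sparse fieldsets. The sketch below assumes a hypothetical fields query parameter (for example, ?fields=id,name,price); the parameter name and the product record are illustrative, not part of any particular framework.

```python
import json
from typing import Optional


def select_fields(record: dict, fields: Optional[str]) -> dict:
    """Return only the comma-separated fields the client asked for; everything if none given."""
    if not fields:
        return record
    wanted = {name.strip() for name in fields.split(",") if name.strip()}
    return {key: value for key, value in record.items() if key in wanted}


# Hypothetical product record with a bulky description the client may not need.
product = {"id": 7, "name": "Widget", "price": 9.99, "description": "Lorem ipsum " * 200}

full_body = json.dumps(product)
slim_body = json.dumps(select_fields(product, "id,name,price"))
print(len(full_body), len(slim_body))  # the trimmed response is a small fraction of the full one
```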

Minimize Latency with Efficient Routing

Routing efficiency is key. The fewer hops your request has to make, the faster it will get to its destination. Services like AWS Elastic Load Balancers and alternatives can help you distribute traffic without creating bottlenecks, ensuring that latency stays low even under heavier loads.

Optimizing routing isn’t just about choosing the shortest path, but also about smart routing based on the least-loaded resources. Dynamic load balancing can give you an edge by ensuring that the quickest available route is used at any given time.
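As a rough illustration of routing to the least-loaded resource, here is a sketch of a least-connections strategy: each request goes to the backend with the fewest in-flight requests. The backend names are hypothetical, and managed load balancers handle this (and much more) for you; the sketch only shows the core idea.

```python
import threading


class LeastConnectionsBalancer:
    """Send each request to the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self._active = {backend: 0 for backend in backends}
        self._lock = threading.Lock()

    def acquire(self) -> str:
        """Pick the least-loaded backend and count the new request against it."""
        with self._lock:
            backend = min(self._active, key=self._active.get)
            self._active[backend] += 1
            return backend

    def release(self, backend: str) -> None:
        """Call when the request finishes so the backend's load drops again."""
        with self._lock:
            self._active[backend] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2", "app-3"])
first = lb.acquire()   # "app-1": everything is idle, ties go to the first backend
second = lb.acquire()  # "app-2"
lb.release(first)      # app-1 finishes its request
third = lb.acquire()   # "app-1" again: zero in-flight requests, tied with app-3
print(first, second, third)  # app-1 app-2 app-1
```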

2. Leverage Caching to Improve Performance

Caching is like your API’s secret weapon. By using a Cache API, you can store frequently requested data closer to where it’s needed, slashing response times and freeing up resources for other requests. Here's a deeper look at caching:

Implement Server-Side Caching

By caching data on the server side with tools like ZoneCache, you can cut down on unnecessary database calls for data that doesn’t change often, such as product listings, daily statistics, or even OpenAI API responses. Pair an effective caching policy (how long each kind of data may be cached) with HTTP caching headers: Cache-Control sets how long a response stays fresh, while validators like ETag or Last-Modified let clients and caches check whether a stored copy is still current.

Use Client-Side Caching

With the right caching headers in place, browsers and other clients can store responses locally and serve repeat requests from that cache instead of going back to the server. A Cache-Control max-age directive, for example, tells the client how long it may reuse a response without asking again. This reduces server load and improves performance, especially on repeat visits.
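To make revalidation concrete, here is a sketch of a handler that sets an ETag plus a Cache-Control header and answers 304 Not Modified when the client sends a matching If-None-Match header. The max-age value and the hashing scheme are assumptions, and the exact-match comparison is simplified: real If-None-Match headers can carry several ETags or a wildcard.

```python
import hashlib
import json
from typing import Optional, Tuple


def respond(body: dict, if_none_match: Optional[str]) -> Tuple[int, dict, bytes]:
    """Return (status, headers, payload), answering 304 when the client's copy is current."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    # An ETag derived from the response bytes (truncated hash; the scheme is an assumption).
    etag = '"' + hashlib.sha256(payload).hexdigest()[:16] + '"'
    headers = {"ETag": etag, "Cache-Control": "private, max-age=60"}
    if if_none_match == etag:
        return 304, headers, b""  # nothing changed: skip sending the body
    return 200, headers, payload


# First request: no cached copy yet, so the full body is sent.
status, headers, body = respond({"plan": "pro", "seats": 5}, None)
# Revalidation: the client echoes the ETag back in If-None-Match.
status_again, _, body_again = respond({"plan": "pro", "seats": 5}, headers["ETag"])
print(status, status_again, len(body_again))  # 200 304 0
```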

Utilize a Content Delivery Network (CDN)

CDNs are game-changers, especially when you’re dealing with static assets (like images or stylesheets). They cache content at multiple locations worldwide so that users can pull data from the nearest server. This reduces latency and frees up your infrastructure for more important tasks.

CDNs can dramatically boost performance for high-traffic applications, so don’t sleep on them. Plus, CDNs often come with built-in security features, which can protect against DDoS attacks by distributing the traffic load.


3. Use Asynchronous Processing for Long-Running Tasks

Let’s face it: long-running tasks are the enemy of fast APIs. But there’s a simple fix: move them off the main request thread. By handling time-intensive tasks asynchronously, you can keep your API feeling snappy, even when heavier operations are running in the background.

Offload Time-Intensive Tasks

Tasks like file processing, data transformations, or third-party API calls can take a lot of time to complete. Instead of making the user wait, push those tasks into a background queue. Tools like RabbitMQ, Amazon SQS, BackgroundDispatcher, and BackgroundLoader make this super easy. Your API can return a quick response, while the backend does the heavy lifting behind the scenes. That way, users don’t experience any lag, and throughput stays high.
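A minimal sketch of this pattern, using Python's in-process queue.Queue and a worker thread as a stand-in for a broker like RabbitMQ or Amazon SQS. The handler enqueues the job and immediately returns 202 Accepted with a job ID the client can poll; the /jobs/{id} status URL is hypothetical. Unlike a real broker, this queue lives in memory and is lost if the process restarts.

```python
import queue
import threading
import uuid

jobs = queue.Queue()  # in-memory stand-in for a message broker
results = {}


def worker() -> None:
    """Background worker: pulls jobs off the queue and does the slow part."""
    while True:
        job_id, path = jobs.get()
        results[job_id] = f"processed {path}"  # placeholder for real file processing
        jobs.task_done()


threading.Thread(target=worker, daemon=True).start()


def handle_upload(path: str) -> tuple:
    """Request handler: enqueue the work and respond immediately with 202 Accepted."""
    job_id = str(uuid.uuid4())
    jobs.put((job_id, path))
    return 202, {"job_id": job_id, "status_url": f"/jobs/{job_id}"}


status, body = handle_upload("report.csv")
jobs.join()  # demo only: wait for the worker so we can show the result
print(status, results[body["job_id"]])  # 202 processed report.csv
```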

Resilience with Asynchronous Processing

Asynchronous processing also adds resilience to your API. Because background jobs run outside the request, a job that fails doesn’t take the user’s request down with it. The main request flow keeps running smoothly, and you can retry or replay the failed job later without missing a beat.
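One way to get that resilience is to retry failed jobs with exponential backoff and, after a final failure, park them somewhere for later inspection or replay. In the sketch below, a plain list stands in for a dead-letter queue, and the retry count and delays are assumptions. Retrying like this is only safe when the job is idempotent.

```python
import time


def run_with_retries(job, max_attempts=3, base_delay=0.1, dead_letter=None):
    """Run a background job, retrying with exponential backoff; park it after the last failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as exc:
            if attempt == max_attempts:
                if dead_letter is not None:
                    dead_letter.append((job.__name__, repr(exc)))  # keep for later replay
                return None
            # With the default base_delay: wait 0.1 s, then 0.2 s, then 0.4 s, ...
            time.sleep(base_delay * 2 ** (attempt - 1))


calls = {"count": 0}


def flaky_job():
    """Hypothetical job that fails twice (say, an upstream timeout) and then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("upstream timeout")
    return "ok"


failed = []
print(run_with_retries(flaky_job, base_delay=0.01, dead_letter=failed))  # ok
print(calls["count"], failed)  # 3 []
```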

4. Implement Connection Pooling

Opening and closing connections for every request is like starting a car from scratch every time you want to drive. Connection pooling keeps a reserve of pre-established connections, so your API doesn’t have to waste time setting up and tearing down connections.

Reuse Database Connections

For high-traffic APIs, establishing new database connections can be a huge resource drain. By using connection pooling, each request can grab a pre-opened connection, avoiding that setup cost. Once the request is done, the connection returns to the pool to be reused. This dramatically reduces connection overhead and boosts throughput.
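The mechanics of a pool fit in a few lines: open a fixed number of connections up front, hand one out per request, and put it back instead of closing it. SQLite is used here only so the example runs without a database server (each in-memory SQLite connection is its own separate database, which is fine for this demo). Most database drivers and ORMs ship a production-ready pool, so treat this as an illustration rather than something to deploy.

```python
import queue
import sqlite3
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool: connections are opened once and reused across requests."""

    def __init__(self, size: int, database: str = ":memory:"):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets whichever thread borrows a connection use it.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout: float = 5.0):
        """Borrow a connection; blocks (up to `timeout` seconds) if all of them are busy."""
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)  # return it to the pool instead of closing it

    def available(self) -> int:
        return self._idle.qsize()


pool = ConnectionPool(size=2)
with pool.connection() as conn:
    print(conn.execute("SELECT 1").fetchone())  # (1,)
    print(pool.available())  # 1 while this connection is borrowed
print(pool.available())  # 2 after it is returned
```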

Optimize Pool Size for Maximum Efficiency

Having too few connections means requests have to wait for an available one, which kills throughput. Too many, and you waste memory and other resources on both the application and the database server. It’s all about finding that sweet spot: monitor your concurrency levels and adjust the pool size as needed. Tuning pool size is an ongoing task, so revisit it as your usage patterns and traffic spikes change.
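For a starting point, Little's law from queueing theory is a useful rule of thumb: the average number of connections in use equals the request rate multiplied by how long each request holds a connection. The sketch below applies it with an assumed 20% headroom; the traffic numbers are hypothetical, and the result is a first estimate to refine with monitoring, not a final answer.

```python
import math


def estimate_pool_size(requests_per_second: float, avg_hold_seconds: float, headroom: float = 1.2) -> int:
    """Little's law (L = lambda * W): average busy connections = arrival rate x hold time."""
    busy = requests_per_second * avg_hold_seconds
    return math.ceil(busy * headroom)  # round up and add headroom for bursts


# Hypothetical traffic: 400 req/s, each holding a connection for 25 ms on average.
print(estimate_pool_size(400, 0.025))  # 12  (10 busy on average, plus 20% headroom)
```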

5. Use Compression to Reduce Payload Size

Compressing data before sending it over the wire can make a huge difference, especially when you’re dealing with large payloads or limited bandwidth. Smaller data = faster transfers.

Implement GZIP or Brotli Compression

GZIP is widely supported and provides solid compression speeds. Brotli, on the other hand, can compress text-based payloads even more efficiently (though check that your clients support it). The result? Smaller payloads, quicker transfer times, and a smoother user experience.
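To see the effect, here is a small sketch using Python's standard gzip module on a repetitive JSON payload, the kind of response list endpoints often return. The data is made up, so the exact byte counts will differ for your payloads. Brotli is not in Python's standard library, so the same comparison for Brotli would need a third-party binding.

```python
import gzip
import json

# Hypothetical list-endpoint response: repetitive JSON compresses very well.
records = [{"id": i, "status": "active", "region": "us-east-1"} for i in range(500)]
raw = json.dumps(records).encode("utf-8")
compressed = gzip.compress(raw, compresslevel=6)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes")
print(f"ratio: {len(compressed) / len(raw):.1%}")
restored = gzip.decompress(compressed)  # the client side: decompression is lossless
```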

Balance Compression with Overhead

Compression doesn’t come without a cost. The server has to compress the data, and the client has to decompress it. This extra CPU time is worth it when you're dealing with large datasets, but if the payload is small or the data varies frequently, the overhead might not justify the speed boost. Consider a dynamic approach to compression based on the size and type of data you're sending.
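A simple version of that dynamic approach is a size threshold: compress only when the client advertises gzip support and the body is large enough to benefit. The 1,024-byte cutoff below is an assumption to tune against your own measurements (web servers expose similar knobs, such as nginx's gzip_min_length). The Accept-Encoding check is deliberately naive and ignores quality values like gzip;q=0.

```python
import gzip

MIN_COMPRESS_BYTES = 1024  # assumed cutoff; tune it from your own measurements


def maybe_compress(body: bytes, accept_encoding: str) -> tuple:
    """Gzip only when the client accepts it and the body is large enough to be worth the CPU."""
    headers = {"Vary": "Accept-Encoding"}  # caches must key on the request's encoding
    accepts_gzip = "gzip" in accept_encoding.lower()  # naive: ignores q-values
    if accepts_gzip and len(body) >= MIN_COMPRESS_BYTES:
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers


small_body, small_headers = maybe_compress(b'{"ok": true}', "gzip, br")
large_body, large_headers = maybe_compress(b'{"items": []}' * 500, "gzip, br")
print("Content-Encoding" in small_headers, "Content-Encoding" in large_headers)  # False True
```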

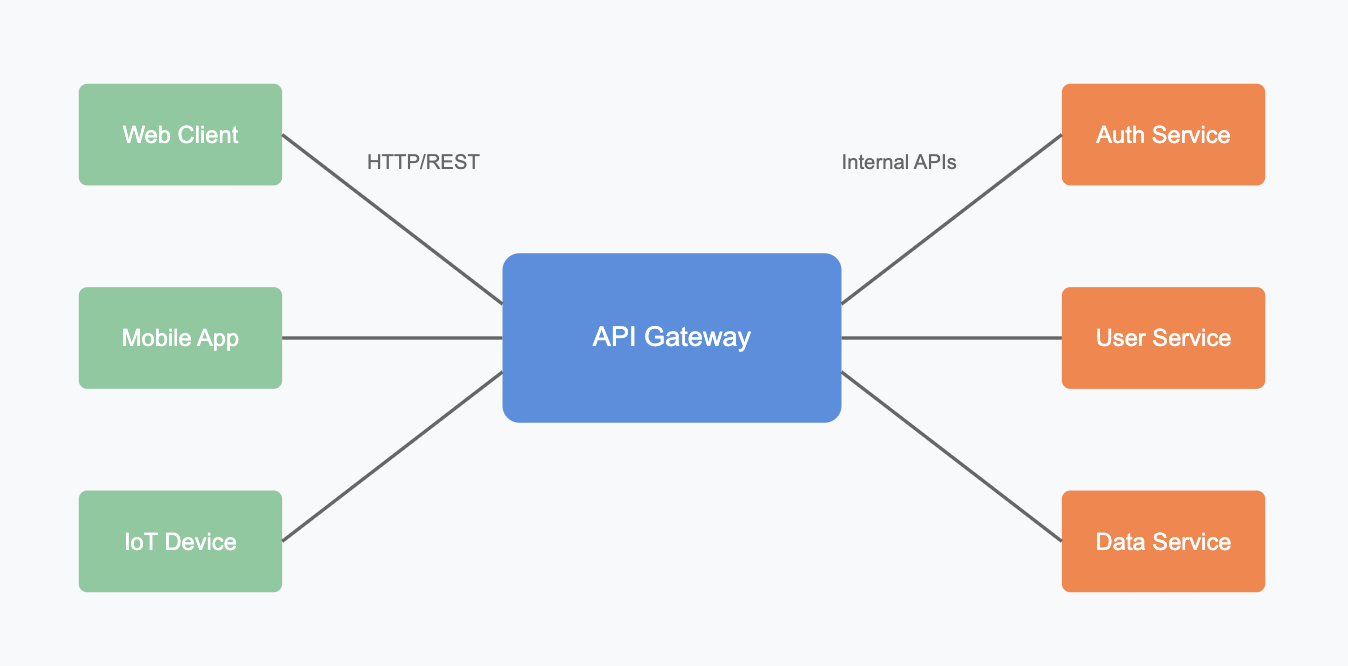

6. Use API Gateway for Load Balancing and Security

API Gateways are powerful tools for optimizing throughput in distributed systems. They help with everything from routing and load balancing to providing an extra layer of security. By leveraging essential API Gateway features, you can enhance your API's performance and security.

Load Balancing with API Gateway

An API Gateway can intelligently distribute incoming traffic to multiple instances or servers. By offloading tasks like authentication and rate limiting to the gateway, your back-end services can focus on the important stuff—data processing and business logic. This helps improve both performance and security. Utilizing a hosted API gateway offers additional benefits, such as ease of maintenance and scalability.
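Gateways commonly implement rate limiting with an algorithm such as the token bucket, sketched below: each client gets a bucket that refills at a steady rate and allows short bursts up to its capacity. This is an illustrative, single-threaded sketch with assumed parameters, not the implementation used by any particular gateway; in practice you would keep one bucket per API key or client IP and answer 429 Too Many Requests when allow() returns False.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        """Spend one token if available; False means the gateway should reply 429."""
        now = self.clock()
        # Refill for the time elapsed since the last check, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# A fake clock keeps the demo deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=1, capacity=2, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]
print(burst)  # [True, True, False]
fake_now[0] = 1.0  # one second later, one token has been refilled
after_refill = bucket.allow()
print(after_refill)  # True
```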

Enhance Security

API Gateways also act as a barrier between your API and the outside world. They can help protect your services from malicious attacks, like DDoS, by filtering traffic before it reaches your servers. By leveraging your gateway’s security features, you can ensure your API is safeguarded against unauthorized access and potential threats.

7. Optimize Database Queries and Indexing

APIs often rely heavily on databases, so optimizing how you interact with your database can have a big impact on throughput. Slow queries and missing indexes can bottleneck performance, but a few key adjustments can make a world of difference.

Optimize Queries

Avoid inefficient operations like unnecessary joins or poorly written SELECT statements (for example, SELECT * when you only need a few columns). Use query profiling tools to pinpoint bottlenecks and make sure your queries are as efficient as possible. Consider caching the results of frequently run queries to avoid repeated database hits and reduce load.
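A classic example of a poorly written query pattern is N+1: fetching a list, then issuing one more query per row. The sketch below uses an in-memory SQLite database with hypothetical users and orders tables, and counts the statements each approach sends via sqlite3's set_trace_callback. Both functions return the same totals, but the single JOIN with GROUP BY replaces 1 + N queries with one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, total REAL);
    INSERT INTO users VALUES (1, 'Ada'), (2, 'Linus'), (3, 'Grace');
    INSERT INTO orders (user_id, total) VALUES (1, 10.0), (1, 5.5), (2, 7.25), (3, 3.0);
""")

statements = []
conn.set_trace_callback(statements.append)  # log every SQL statement SQLite runs


def totals_n_plus_one() -> dict:
    """One query for the users, then one more query per user."""
    users = conn.execute("SELECT id, name FROM users").fetchall()
    return {
        name: conn.execute("SELECT SUM(total) FROM orders WHERE user_id = ?", (uid,)).fetchone()[0]
        for uid, name in users
    }


def totals_single_query() -> dict:
    """The same answer from a single aggregated JOIN."""
    rows = conn.execute(
        """
        SELECT u.name, SUM(o.total)
        FROM users AS u JOIN orders AS o ON o.user_id = u.id
        GROUP BY u.id
        """
    ).fetchall()
    return dict(rows)


statements.clear()
slow = totals_n_plus_one()
n_plus_one_count = len(statements)
statements.clear()
fast = totals_single_query()
single_count = len(statements)
print(slow == fast, n_plus_one_count, single_count)
```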

Indexing

Make sure frequently queried fields are indexed. This can drastically reduce the time it takes to retrieve data. But be careful not to over-index: every index has to be updated on each insert, update, or delete, which adds overhead to writes. Aim for a set of indexes that balances read and write efficiency.
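You can check whether a query actually uses an index by asking the database for its query plan. This sketch uses SQLite's EXPLAIN QUERY PLAN on a hypothetical orders table; other databases offer equivalents, such as EXPLAIN in PostgreSQL and MySQL. The exact plan text varies between SQLite versions, so the comments show typical output rather than guaranteed strings.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_email TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_email, total) VALUES (?, ?)",
    [(f"user{i % 1000}@example.com", i * 1.5) for i in range(10_000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_email = ?"


def query_plan(sql: str) -> str:
    """Return SQLite's plan; the last column of each row is the human-readable detail."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("user42@example.com",)).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


before = query_plan(QUERY)
print(before)  # typically "SCAN orders": every row is read
conn.execute("CREATE INDEX idx_orders_customer_email ON orders (customer_email)")
after = query_plan(QUERY)
print(after)  # typically "SEARCH orders USING INDEX idx_orders_customer_email (customer_email=?)"
```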

8. Use Microservices for Decoupling and Scaling

For larger applications, splitting your API into microservices can significantly improve throughput. By decoupling services, you can optimize and scale each one independently.

Benefits of Microservices

Microservices reduce the likelihood of bottlenecks. If one service is underperforming, you can scale it without affecting the entire system. You can also choose specialized technologies for each service, such as using a NoSQL database for high-throughput services and a relational database for transactional tasks. Additionally, tools that generate APIs from databases can help speed up development and simplify integrations.

Fine-Grained Control

Microservices let you apply optimizations to specific parts of your application, giving you more granular control over performance. This flexibility can significantly improve throughput across the entire system.

Monitoring and Continuous Optimization

Even after implementing all of these optimizations, your work isn't over. API performance is a moving target, and without continuous monitoring, it's easy for small inefficiencies to creep back in over time. By integrating proper monitoring tools and performance analytics, you can ensure your API stays in top shape, even as user behavior or traffic patterns change.

Leverage Monitoring Tools

Tools like Prometheus, Grafana, or New Relic can help track key metrics such as response times, throughput, and error rates. Set up alerts to notify you when a metric crosses a threshold, signaling that something may need attention. For example, if your API's response time spikes above a certain threshold, you'll know right away and can investigate.
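As a sketch of the kind of rule you might alert on, here is a check that fires when the 95th-percentile (p95) latency over a window of requests exceeds a threshold. Percentiles catch slow tails that averages hide. The 500 ms threshold is an assumption; in Prometheus you would typically express the same idea as an alerting rule built on histogram_quantile rather than in application code.

```python
import statistics

P95_THRESHOLD_MS = 500  # assumed alert threshold


def p95(latencies_ms) -> float:
    """95th percentile of a window of request latencies (needs at least two samples)."""
    return statistics.quantiles(latencies_ms, n=100)[94]


def latency_alert(latencies_ms) -> bool:
    """True when the slow tail, not just the average, has crossed the threshold."""
    return p95(latencies_ms) > P95_THRESHOLD_MS


healthy = [120] * 95 + [300] * 5
degraded = [120] * 90 + [900] * 10
print(latency_alert(healthy), latency_alert(degraded))  # False True
```

Note that the degraded window averages only 198 ms, well under the threshold, even though one request in ten takes 900 ms.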

Track Performance Trends

Rather than just watching metrics in real time, it’s helpful to track trends over time. Look for patterns in your throughput data to understand when traffic is highest and when your API faces the most load. This information can inform decisions on scaling or adjusting resources before issues arise.

Keep an Eye on Error Rates

Error rates are one of the most critical indicators of API health. By setting up alerts for increased error rates, you can quickly identify failing endpoints and begin investigating the root cause before it escalates.
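A sliding-window error-rate monitor captures this idea in code: keep recent responses, drop those older than the window, and alert when the share of server errors crosses a threshold. The 60-second window, the 5% threshold, and the choice to count only 5xx responses as errors are all assumptions to adjust for your API; an injectable clock keeps the example deterministic.

```python
import time
from collections import deque


class ErrorRateMonitor:
    """Track the share of 5xx responses over a sliding time window."""

    def __init__(self, window_seconds: float = 60.0, threshold: float = 0.05, clock=time.monotonic):
        self.window = window_seconds
        self.threshold = threshold
        self.clock = clock
        self.events = deque()  # (timestamp, is_error) pairs, oldest first

    def record(self, status_code: int) -> None:
        self.events.append((self.clock(), status_code >= 500))
        self._evict()

    def _evict(self) -> None:
        """Drop responses that have aged out of the window."""
        cutoff = self.clock() - self.window
        while self.events and self.events[0][0] < cutoff:
            self.events.popleft()

    def error_rate(self) -> float:
        self._evict()
        if not self.events:
            return 0.0
        return sum(is_error for _, is_error in self.events) / len(self.events)

    def should_alert(self) -> bool:
        return self.error_rate() > self.threshold


now = [0.0]
monitor = ErrorRateMonitor(window_seconds=60, threshold=0.05, clock=lambda: now[0])
for code in [200] * 18 + [503, 500]:
    monitor.record(code)
rate, alert = monitor.error_rate(), monitor.should_alert()
print(rate, alert)  # 0.1 True
now[0] = 120.0  # two minutes later: the old responses have aged out of the window
later_rate = monitor.error_rate()
print(later_rate)  # 0.0
```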

Refine Based on Data

Make data-driven decisions when refining your API. For instance, you can make targeted improvements if you notice a high error rate at specific endpoints or excessive latency in a particular region. You'll know which areas to optimize for maximum impact by constantly evaluating performance metrics.

Load Testing On a Regular Basis

Load testing shouldn't be a one-time thing. Regular testing using tools like JMeter or Apache Bench helps simulate heavy traffic and identify weak spots that could hinder throughput. It's an essential part of an ongoing optimization process.

Unlocking the Full Potential of Your API

Bottlenecks in throughput often come down to code efficiency, resource management, and smart use of techniques like caching and connection pooling. Identifying performance issues through targeted profiling helps you prioritize fixes that yield the biggest gains. Caching and compression cut the repeated work servers perform, while asynchronous processing offloads heavy jobs so the main request flow can stay quick. Connection pooling then reduces the overhead of spinning up connections, and well-sized pools keep everything running smoothly during traffic spikes.

All these methods work best as part of an ongoing optimization cycle. Load testing with tools like JMeter helps you see how changes play out under realistic conditions. By refining endpoints, reducing round trips, and ensuring each request is handled as efficiently as possible, you can build APIs that are fast, reliable, and ready for growth, and that’s a win for everyone. 👏 Looking to boost your API throughput and performance? Explore Zuplo's API optimization solutions for tailored strategies and seamless integrations. Get started today!